原 【DB宝42】MySQL高可用架构MHA+ProxySQL实现读写分离和负载均衡

Tags: 原创MySQL高可用监控主从复制MHA读写分离负载均衡ProxySQL

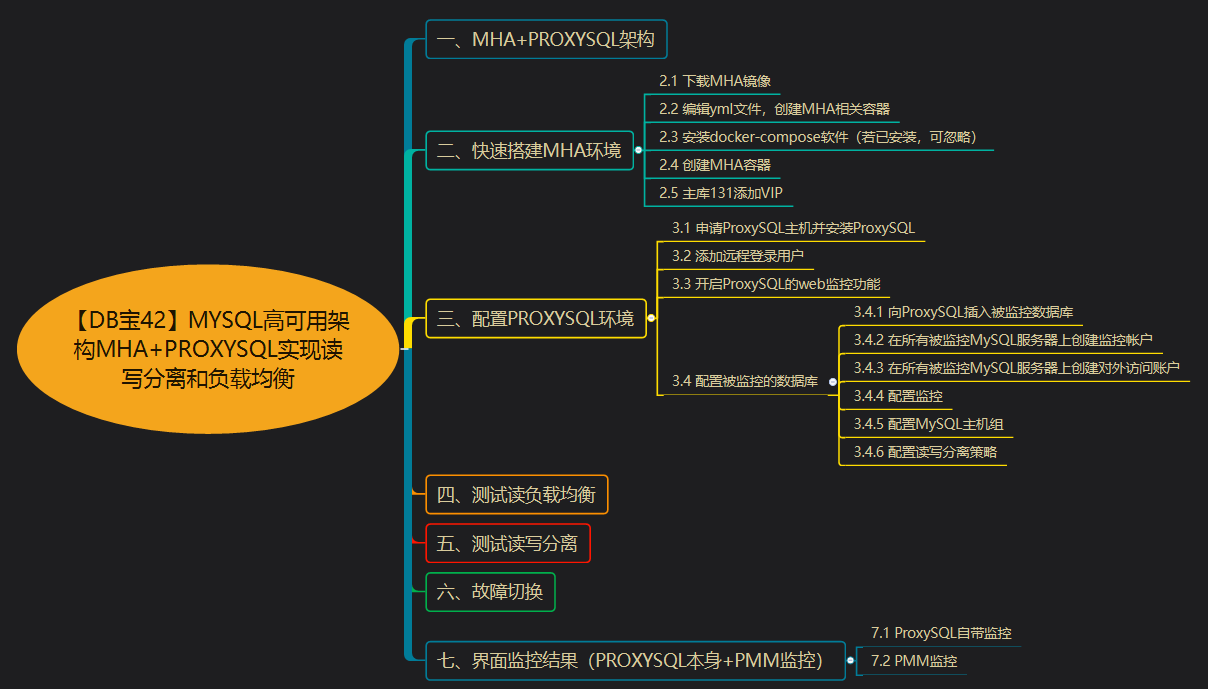

一、MHA+ProxySQL架构

之前发过一篇MHA的文章,介绍了MHA相关的知识和功能测试,连接为:【DB宝19】在Docker中使用MySQL高可用之MHA 。今天这一篇给大家分享一下“MHA+中间件ProxySQL”来实现读写分离+负载均衡的相关知识。

我们都知道,MHA(Master High Availability Manager and tools for MySQL)目前在MySQL高可用方面是一个相对成熟的解决方案,是一套作为MySQL高可用性环境下故障切换和主从提升的高可用软件。它的架构是要求一个MySQL复制集群必须最少有3台数据库服务器,一主二从,即一台充当Master,一台充当备用Master,另一台充当从库。但是,如果不连接任何外部的数据库中间件,那么就会导致所有的业务压力流向主库,从而造成主库压力过大,而2个从库除了本身的IO和SQL线程外,无任何业务压力,会严重造成资源的浪费。因此,我们可以把MHA和ProxySQL结合使用来实现读写分离和负载均衡。所有的业务通过中间件ProxySQL后,会被分配到不同的MySQL机器上。从而,前端的写操作会流向主库,而读操作会被负载均衡的转发到2个从库上。

MHA+ProxySQL架构如下图所示: