Horizontal and Vertical Expansion of a Greenplum System in Practice

Tags: original, GreenPlum, scale out (horizontal expansion), scale up (vertical expansion), expansion, adding nodes, removing mirrors, scaling in

Introduction

For more background on the theory, see: https://www.xmmup.com/kuoronggreenplumxitongzengjiasegmentjiedian.html

With group mirroring (the default), you must add at least 2 hosts.

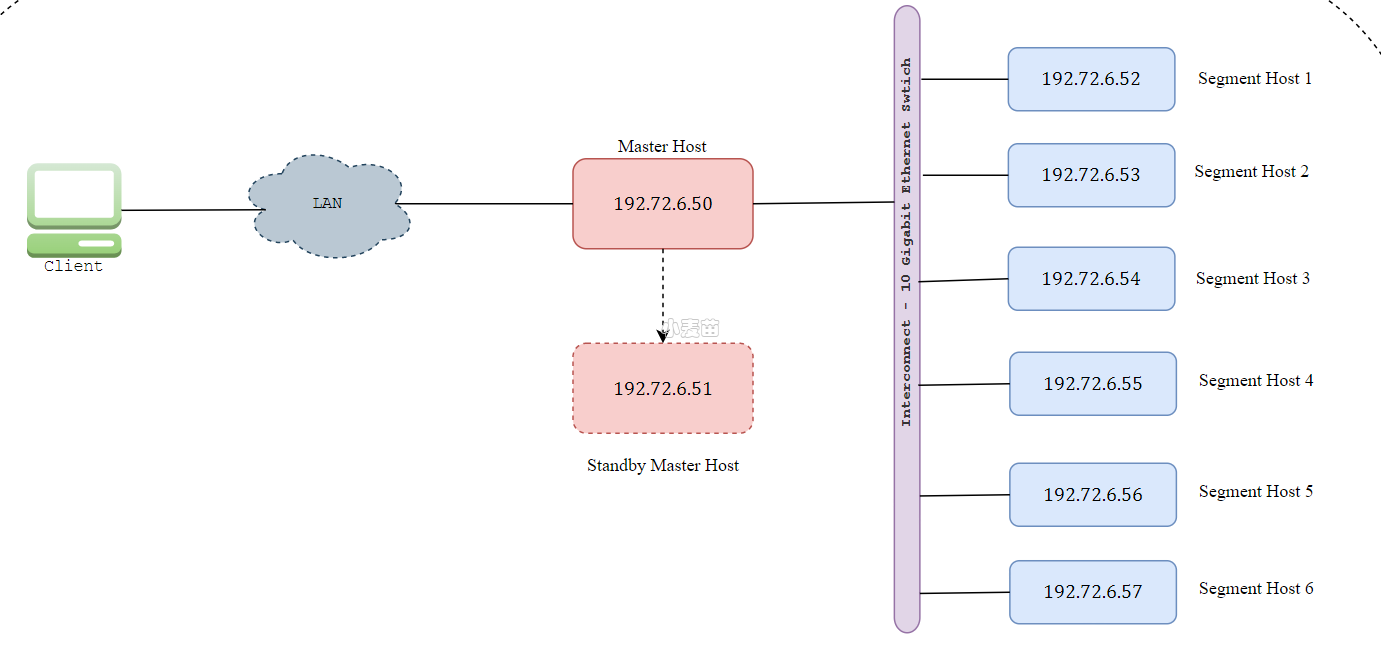

Lab environment

The lab environment used below is based on: https://www.xmmup.com/mppjiagouzhigreenplumdeanzhuangpeizhigaojiban.html

https://www.xmmup.com/liyongqiantaodockerzaidangerongqizhonganzhuanggreenplumduozhujihuanjing.html

```bash
# Start the outer container that hosts the whole lab
docker rm -f lhrgpdball
docker run -itd --name lhrgpdball -h lhrgpdball \
  -p 5433:5432 -p 28180:28080 -p 28181:28081 -p 23189:3389 \
  -v /sys/fs/cgroup:/sys/fs/cgroup \
  --restart=always \
  --privileged=true lhrbest/gpdball:6.25.1 \
  /usr/sbin/init

docker exec -it lhrgpdball bash

# Inside lhrgpdball: add 2 new nodes
docker rm -f sdw5
docker run -itd --name sdw5 -h sdw5 \
  --net=lhrnw --ip 192.72.6.56 \
  -v /sys/fs/cgroup:/sys/fs/cgroup \
  --privileged=true \
  --add-host='mdw mdw:192.72.6.50' \
  --add-host='smdw smdw:192.72.6.51' \
  --add-host='sdw1:192.72.6.52' \
  --add-host='sdw2:192.72.6.53' \
  --add-host='sdw3:192.72.6.54' \
  --add-host='sdw4:192.72.6.55' \
  --add-host='sdw5:192.72.6.56' \
  --add-host='sdw6:192.72.6.57' \
  lhrbest/lhrcentos76:9.2 \
  /usr/sbin/init

docker rm -f sdw6
docker run -itd --name sdw6 -h sdw6 \
  --net=lhrnw --ip 192.72.6.57 \
  -v /sys/fs/cgroup:/sys/fs/cgroup \
  --privileged=true \
  --add-host='mdw mdw:192.72.6.50' \
  --add-host='smdw smdw:192.72.6.51' \
  --add-host='sdw1:192.72.6.52' \
  --add-host='sdw2:192.72.6.53' \
  --add-host='sdw3:192.72.6.54' \
  --add-host='sdw4:192.72.6.55' \
  --add-host='sdw5:192.72.6.56' \
  --add-host='sdw6:192.72.6.57' \
  lhrbest/lhrcentos76:9.2 \
  /usr/sbin/init
```

Container status afterwards:

```
[root@lhrgpdball /]# docker ps -a
CONTAINER ID   IMAGE                     COMMAND            CREATED          STATUS          PORTS                                              NAMES
bce5848f314c   lhrbest/lhrcentos76:9.2   "/usr/sbin/init"   11 minutes ago   Up 2 minutes                                                       sdw6
e414c039fdc2   lhrbest/lhrcentos76:9.2   "/usr/sbin/init"   11 minutes ago   Up 2 minutes                                                       sdw5
d358e01c0f86   lhrbest/lhrcentos76:9.2   "/usr/sbin/init"   10 days ago      Up 12 minutes                                                      sdw4
e8b3f1a2bd4d   lhrbest/lhrcentos76:9.2   "/usr/sbin/init"   10 days ago      Up 12 minutes                                                      sdw3
be22a8e05ff4   lhrbest/lhrcentos76:9.2   "/usr/sbin/init"   10 days ago      Up 12 minutes                                                      sdw2
c682042f585e   lhrbest/lhrcentos76:9.2   "/usr/sbin/init"   10 days ago      Up 12 minutes                                                      sdw1
a3aa845d78be   lhrbest/lhrcentos76:9.2   "/usr/sbin/init"   10 days ago      Up 12 minutes   0.0.0.0:5433->5432/tcp, 0.0.0.0:28081->28080/tcp   smdw
03254d50b7d6   lhrbest/lhrcentos76:9.2   "/usr/sbin/init"   10 days ago      Up 12 minutes   0.0.0.0:5432->5432/tcp, 0.0.0.0:28080->28080/tcp   mdw
[root@lhrgpdball /]#
```
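The `docker run` commands for sdw5 and sdw6 differ only in the container name and IP. Below is a sketch in bash that builds both from a single hypothetical helper, `docker_run_cmd`. Rather than running the commands, it prints them so you can check them before piping the output to `bash`. The `docker rm -f` step is not included. `printf %q` escapes the space in `mdw mdw` as `mdw\ mdw`, and bash parses that the same way as the quoted form.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the "docker run" command for one new segment host.
# Nothing is executed; review the output, then pipe it to bash if it looks right.
docker_run_cmd() {
  local name=$1 ip=$2 i
  local -a args=(docker run -itd --name "$name" -h "$name"
    --net=lhrnw --ip "$ip"
    -v /sys/fs/cgroup:/sys/fs/cgroup --privileged=true
    --add-host='mdw mdw:192.72.6.50' --add-host='smdw smdw:192.72.6.51')
  # sdw1..sdw6 map to 192.72.6.52..192.72.6.57
  for i in 1 2 3 4 5 6; do
    args+=(--add-host="sdw$i:192.72.6.$((51 + i))")
  done
  args+=(lhrbest/lhrcentos76:9.2 /usr/sbin/init)
  # %q quotes each argument so the printed line can be re-run by bash
  printf '%q ' "${args[@]}"
  printf '\n'
}

# Print the commands for the two new nodes (sdw5 -> .56, sdw6 -> .57)
for n in 5 6; do
  docker_run_cmd "sdw$n" "192.72.6.$((51 + n))"
done
```

Adding a node later then only requires widening the loop range and the `--add-host` list.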

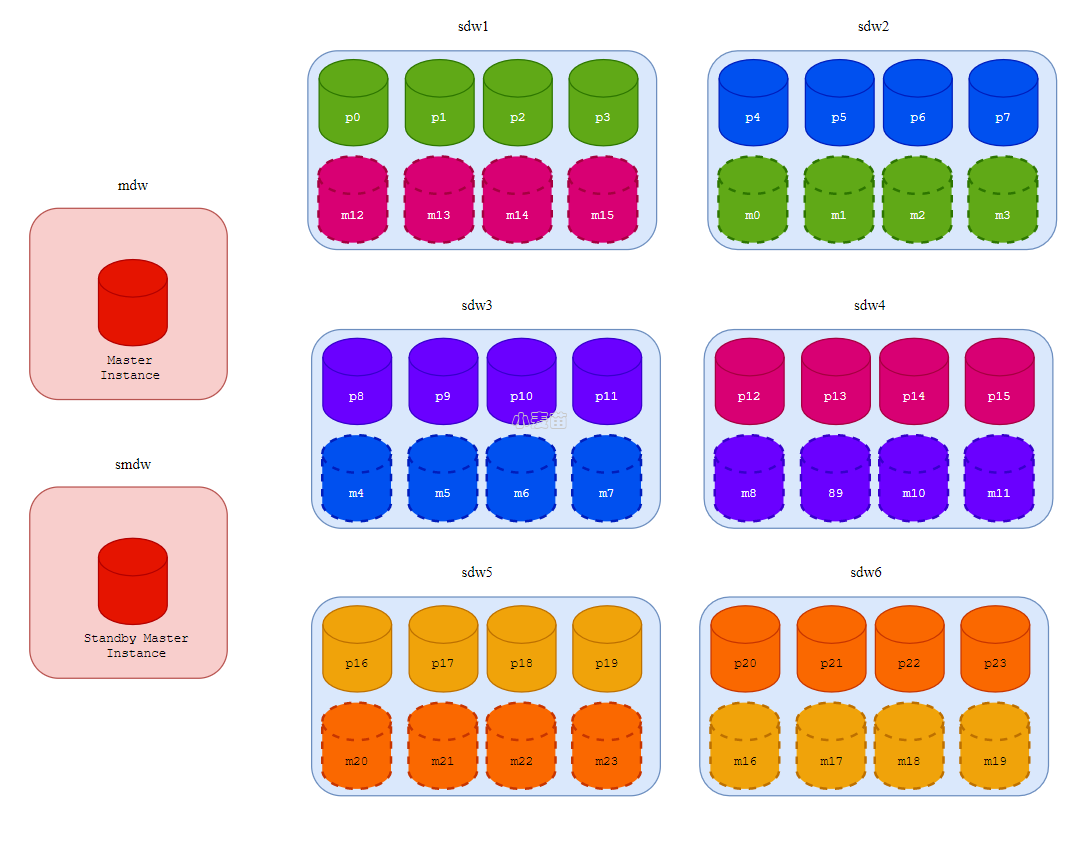

Horizontal expansion (adding nodes)

Scaling Greenplum out means adding servers. The existing nodes stay as they are, and new storage (segment) hosts are added.

Environment after horizontal expansion:

Current environment (before expansion)

```
postgres=# select * from gp_segment_configuration order by hostname,role desc;
 dbid | content | role | preferred_role | mode | status | port | hostname | address | datadir
------+---------+------+----------------+------+--------+------+----------+---------+-------------------------------------
    1 |      -1 | p    | p              | n    | u      | 5432 | mdw1     | mdw1    | /opt/greenplum/data/master/gpseg-1
   34 |      -1 | m    | m              | s    | u      | 5432 | mdw2     | mdw2    | /opt/greenplum/data/master/gpseg-1
    2 |       0 | p    | p              | s    | u      | 6000 | sdw1     | sdw1    | /opt/greenplum/data/primary/gpseg0
    3 |       1 | p    | p              | s    | u      | 6001 | sdw1     | sdw1    | /opt/greenplum/data/primary/gpseg1
    4 |       2 | p    | p              | s    | u      | 6002 | sdw1     | sdw1    | /opt/greenplum/data/primary/gpseg2
    5 |       3 | p    | p              | s    | u      | 6003 | sdw1     | sdw1    | /opt/greenplum/data/primary/gpseg3
   31 |      13 | m    | m              | s    | u      | 7001 | sdw1     | sdw1    | /opt/greenplum/data/mirror/gpseg13
   33 |      15 | m    | m              | s    | u      | 7003 | sdw1     | sdw1    | /opt/greenplum/data/mirror/gpseg15
   32 |      14 | m    | m              | s    | u      | 7002 | sdw1     | sdw1    | /opt/greenplum/data/mirror/gpseg14
   30 |      12 | m    | m              | s    | u      | 7000 | sdw1     | sdw1    | /opt/greenplum/data/mirror/gpseg12
    6 |       4 | p    | p              | s    | u      | 6000 | sdw2     | sdw2    | /opt/greenplum/data/primary/gpseg4
    9 |       7 | p    | p              | s    | u      | 6003 | sdw2     | sdw2    | /opt/greenplum/data/primary/gpseg7
    7 |       5 | p    | p              | s    | u      | 6001 | sdw2     | sdw2    | /opt/greenplum/data/primary/gpseg5
    8 |       6 | p    | p              | s    | u      | 6002 | sdw2     | sdw2    | /opt/greenplum/data/primary/gpseg6
   19 |       1 | m    | m              | s    | u      | 7001 | sdw2     | sdw2    | /opt/greenplum/data/mirror/gpseg1
   20 |       2 | m    | m              | s    | u      | 7002 | sdw2     | sdw2    | /opt/greenplum/data/mirror/gpseg2
   21 |       3 | m    | m              | s    | u      | 7003 | sdw2     | sdw2    | /opt/greenplum/data/mirror/gpseg3
   18 |       0 | m    | m              | s    | u      | 7000 | sdw2     | sdw2    | /opt/greenplum/data/mirror/gpseg0
   11 |       9 | p    | p              | s    | u      | 6001 | sdw3     | sdw3    | /opt/greenplum/data/primary/gpseg9
   10 |       8 | p    | p              | s    | u      | 6000 | sdw3     | sdw3    | /opt/greenplum/data/primary/gpseg8
   13 |      11 | p    | p              | s    | u      | 6003 | sdw3     | sdw3    | /opt/greenplum/data/primary/gpseg11
   12 |      10 | p    | p              | s    | u      | 6002 | sdw3     | sdw3    | /opt/greenplum/data/primary/gpseg10
   23 |       5 | m    | m              | s    | u      | 7001 | sdw3     | sdw3    | /opt/greenplum/data/mirror/gpseg5
   22 |       4 | m    | m              | s    | u      | 7000 | sdw3     | sdw3    | /opt/greenplum/data/mirror/gpseg4
   24 |       6 | m    | m              | s    | u      | 7002 | sdw3     | sdw3    | /opt/greenplum/data/mirror/gpseg6
   25 |       7 | m    | m              | s    | u      | 7003 | sdw3     | sdw3    | /opt/greenplum/data/mirror/gpseg7
   15 |      13 | p    | p              | s    | u      | 6001 | sdw4     | sdw4    | /opt/greenplum/data/primary/gpseg13
   14 |      12 | p    | p              | s    | u      | 6000 | sdw4     | sdw4    | /opt/greenplum/data/primary/gpseg12
   17 |      15 | p    | p              | s    | u      | 6003 | sdw4     | sdw4    | /opt/greenplum/data/primary/gpseg15
   16 |      14 | p    | p              | s    | u      | 6002 | sdw4     | sdw4    | /opt/greenplum/data/primary/gpseg14
   29 |      11 | m    | m              | s    | u      | 7003 | sdw4     | sdw4    | /opt/greenplum/data/mirror/gpseg11
   26 |       8 | m    | m              | s    | u      | 7000 | sdw4     | sdw4    | /opt/greenplum/data/mirror/gpseg8
   28 |      10 | m    | m              | s    | u      | 7002 | sdw4     | sdw4    | /opt/greenplum/data/mirror/gpseg10
   27 |       9 | m    | m              | s    | u      | 7001 | sdw4     | sdw4    | /opt/greenplum/data/mirror/gpseg9
(34 rows)
```
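This listing shows the group-mirroring pattern. The four primaries on each host have all of their mirrors on the next host, and sdw4 wraps around to sdw1. The bash sketch below reproduces that layout from the list of hosts. It assumes four segments per host and the 6000/7000 port bases read off the output. `group_mirror_layout` and `SEGS_PER_HOST` are hypothetical names. The sketch models only what this table shows; it does not describe how `gpexpand` numbers or places new segments.

```bash
#!/usr/bin/env bash
# Assumptions read off gp_segment_configuration above (not taken from Greenplum code):
# SEGS_PER_HOST primaries per host, primary ports from 6000, mirror ports from 7000,
# and the mirrors of host i all live on host i+1 (the last host wraps to the first).
SEGS_PER_HOST=4

group_mirror_layout() {
  local -a hosts=("$@")
  local n=${#hosts[@]} h s content
  # With one host the "next host" would be the host itself, so refuse.
  if [ "$n" -lt 2 ]; then
    echo "group mirroring needs at least 2 hosts" >&2
    return 1
  fi
  for ((h = 0; h < n; h++)); do
    for ((s = 0; s < SEGS_PER_HOST; s++)); do
      content=$((h * SEGS_PER_HOST + s))
      printf 'content %d: primary %s:%d  mirror %s:%d\n' \
        "$content" "${hosts[h]}" $((6000 + s)) \
        "${hosts[(h + 1) % n]}" $((7000 + s))
    done
  done
}
```

`group_mirror_layout sdw1 sdw2 sdw3 sdw4` prints 16 lines that agree with the table. For example, content 13 is the primary on sdw4:6001, and its mirror is on sdw1:7001. When given a single host, the function refuses because the ring would wrap back onto that same host. This is consistent with the introduction's requirement to add at least 2 hosts.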

Configure the new nodes

```bash
# Run on each new node (sdw5 and sdw6), using that node's own name here
hostnamectl set-hostname sdw5

# SELINUX=disabled takes effect after a reboot; setenforce 0 switches to permissive now
sed -i s/SELINUX=enforcing/SELINUX=disabled/g /etc/selinux/config
setenforce 0

# Make login sessions apply /etc/security/limits.conf
ls -l /lib64/security/pam_limits.so
echo "session required /lib64/security/pam_limits.so" >> /etc/pam.d/login

cat >> /etc/security/limits.conf <<"EOF"
* soft nofile 655350
* hard nofile 655350
* soft nproc 655350
* hard nproc 655350
gpadmin soft priority -20
EOF

# Raise the default per-user process limit from 4096
sed -i 's/4096/655350/' /etc/security/limits.d/20-nproc.conf
cat /etc/security/limits.d/20-nproc.conf

# Kernel parameters
cat >> /etc/sysctl.conf <<"EOF"
fs.file-max=9000000
fs.inotify.max_user_instances = 1000000
fs.inotify.max_user_watches = 1000000
kernel.pid_max=4194304
kernel.shmmax = 4398046511104
kernel.shmmni = 4096
kernel.shmall = 4000000000
kernel.sem = 32000 1024000000 500 32000
vm.overcommit_memory=1
vm.overcommit_ratio=95
net.ipv4.ip_forward=1
vm.swappiness=20
vm.dirty_background_bytes = 0
vm.dirty_background_ratio = 5
vm.dirty_bytes = 0
vm.dirty_expire_centisecs = 600
vm.dirty_ratio = 10
vm.dirty_writeback_centisecs = 100
vm.vfs_cache_pressure = 500
vm.min_free_kbytes = 2097152
EOF
sysctl -p

# Create the gpadmin group and user (GID/UID 530) and set its password
groupadd -g 530 gpadmin
useradd -g 530 -u 530 -m -d /home/gpadmin -s /bin/bash gpadmin
chown -R gpadmin:gpadmin /home/gpadmin
echo "gpadmin:lhr" | chpasswd
```
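Several of the sysctl values above are hard-coded. For example, `vm.min_free_kbytes = 2097152` always reserves 2 GB, whatever the host's memory size. The bash sketch below derives three of these values from the machine's memory instead. It uses the formulas as we understand them from the Greenplum 6 system configuration guidance:

- `kernel.shmall` = half of `_PHYS_PAGES`
- `kernel.shmmax` = `shmall * PAGE_SIZE`
- `vm.min_free_kbytes` = 3% of `MemTotal`

Check these against the documentation for your version. `gp_mem_sysctls` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print memory-derived sysctl lines.
#   $1 = physical pages (getconf _PHYS_PAGES)
#   $2 = page size in bytes (getconf PAGE_SIZE)
#   $3 = MemTotal in kB (from /proc/meminfo)
gp_mem_sysctls() {
  local phys_pages=$1 page_size=$2 mem_kb=$3
  local shmall=$((phys_pages / 2))          # half of physical memory, in pages
  echo "kernel.shmall = $shmall"
  echo "kernel.shmmax = $((shmall * page_size))"   # same amount, in bytes
  echo "vm.min_free_kbytes = $((mem_kb * 3 / 100))" # 3% of MemTotal
}
```

On a node, run `gp_mem_sysctls "$(getconf _PHYS_PAGES)" "$(getconf PAGE_SIZE)" "$(awk '/MemTotal/ {print $2}' /proc/meminfo)"`. Use the printed lines in place of the fixed entries before running `sysctl -p`.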

Update all nodes

```bash
# Run on all nodes as root: map every cluster host name to its IP
cat >> /etc/hosts <<"EOF"
192.72.6.50 mdw
192.72.6.51 smdw
192.72.6.52 sdw1
192.72.6.53 sdw2
192.72.6.54 sdw3
192.72.6.55 sdw4
192.72.6.56 sdw5
192.72.6.57 sdw6
EOF

# Switch to gpadmin; the remaining commands run as gpadmin
su - gpadmin
mkdir -p /home/gpadmin/conf/

# All cluster hosts
cat > /home/gpadmin/conf/all_hosts <<"EOF"
mdw
smdw
sdw1
sdw2
sdw3
sdw4
sdw5
sdw6
EOF

# Segment hosts only
cat > /home/gpadmin/conf/seg_hosts <<"EOF"
sdw1
sdw2
sdw3
sdw4
sdw5
sdw6
EOF
```
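`cat >> /etc/hosts` creates duplicate lines when the step is repeated. The new containers also already received these names through `--add-host`. Below is a minimal idempotent alternative in bash: it appends a mapping only when the name is not already listed. `add_host_entry` and `update_hosts_file` are hypothetical helpers, not Greenplum tools, and the IP plan is the one used in this lab.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
# NAME must appear as a whole word after some whitespace on a non-comment line,
# so "mdw" does not match "smdw" and "sdw1" does not match "sdw10".
add_host_entry() {
  local file=$1 ip=$2 name=$3
  if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Lab IP plan: mdw .50, smdw .51, sdw1..sdw6 .52..57
update_hosts_file() {
  local file=$1 i
  add_host_entry "$file" 192.72.6.50 mdw
  add_host_entry "$file" 192.72.6.51 smdw
  for i in 1 2 3 4 5 6; do
    add_host_entry "$file" "192.72.6.$((51 + i))" "sdw$i"
  done
}
```

Run `update_hosts_file /etc/hosts` as root on each node. A second run leaves the file unchanged.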

Configure passwordless SSH (mutual trust)

On the master:

```bash
# Run from the directory that contains sshUserSetup.sh
./sshUserSetup.sh -user root -hosts "mdw smdw sdw1 sdw2 sdw3 sdw4 sdw5 sdw6" -advanced -noPromptPassphrase
./sshUserSetup.sh -user gpadmin -hosts "mdw smdw sdw1 sdw2 sdw3 sdw4 sdw5 sdw6" -advanced -noPromptPassphrase
chmod 600 /home/gpadmin/.ssh/config

# Verify (as gpadmin): every host should print its date without asking for a password
gpssh -f /home/gpadmin/conf/all_hosts date
```
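If you want a check that does not depend on `gpssh`, the bash sketch below (assuming OpenSSH) connects to every host in `all_hosts` with `ssh -o BatchMode=yes`. It reports missing trust as a failure rather than prompting for a password. `ssh_probe` and `check_passwordless_ssh` are hypothetical names.

```bash
#!/usr/bin/env bash
# Probe one host. -n keeps ssh from consuming the caller's stdin (the host list);
# BatchMode=yes makes ssh fail instead of prompting when key auth is missing.
ssh_probe() {
  ssh -n -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

# Check every host in a gpssh-style host file (one name per line).
# Prints OK/FAIL per host; returns non-zero if any host failed.
check_passwordless_ssh() {
  local file=$1 host failed=0
  while IFS= read -r host || [ -n "$host" ]; do
    case $host in ''|'#'*) continue ;; esac   # skip blank lines and comments
    if ssh_probe "$host"; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=$((failed + 1))
    fi
  done < "$file"
  [ "$failed" -eq 0 ]
}
```

As gpadmin, run `check_passwordless_ssh /home/gpadmin/conf/all_hosts`. Repeat the check as root if root trust is also required.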

Install the Greenplum software and Greenplum Command Center (GPCC) on the new nodes

On sdw5 and sdw6: