合 使用dataX全量迁移Oracle到MySQL

有关dataX更多内容请参考:https://www.xmmup.com/alikaiyuanetlgongjuzhidataxhedatax-webjieshao.html

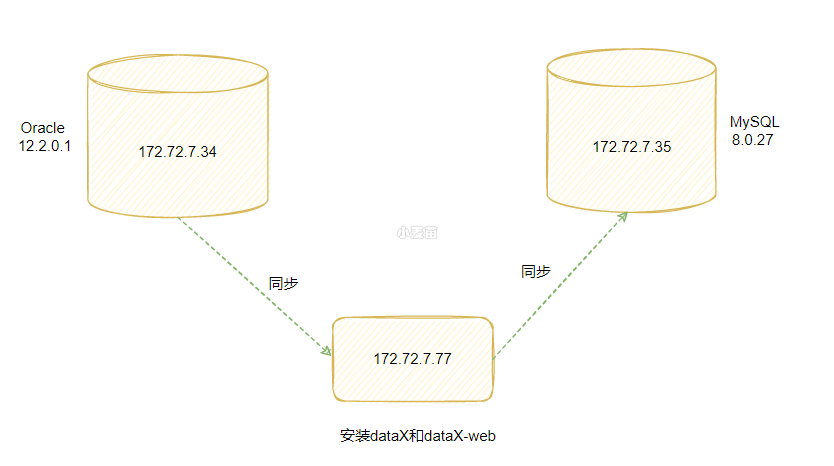

环境准备

Oracle和MySQL环境准备

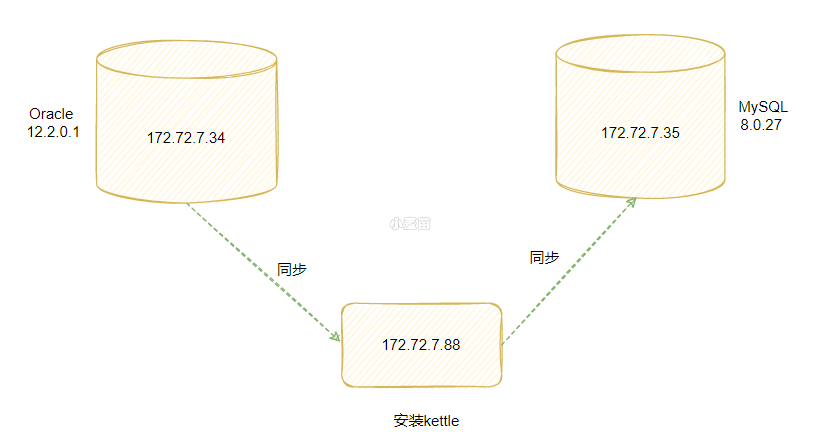

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 | -- 创建专用网络 docker network create --subnet=172.72.7.0/24 ora-network -- oracle 压测工具 docker pull lhrbest/lhrdbbench:1.0 docker rm -f lhrdbbench docker run -d --name lhrdbbench -h lhrdbbench \ --net=ora-network --ip 172.72.7.26 \ -v /sys/fs/cgroup:/sys/fs/cgroup \ --privileged=true lhrbest/lhrdbbench:1.0 \ /usr/sbin/init -- Oracle 12c docker rm -f lhrora1221 docker run -itd --name lhrora1221 -h lhrora1221 \ --net=ora-network --ip 172.72.7.34 \ -p 1526:1521 -p 3396:3389 \ --privileged=true \ lhrbest/oracle_12cr2_ee_lhr_12.2.0.1:2.0 init -- mysql docker rm -f mysql8027 docker run -d --name mysql8027 -h mysql8027 -p 3418:3306 \ --net=ora-network --ip 172.72.7.35 \ -v /etc/mysql/mysql8027/conf:/etc/mysql/conf.d \ -e MYSQL_ROOT_PASSWORD=lhr -e TZ=Asia/Shanghai \ mysql:8.0.27 cat > /etc/mysql/mysql8027/conf/my.cnf << "EOF" [mysqld] default-time-zone = '+8:00' log_timestamps = SYSTEM skip-name-resolve log-bin server_id=80273418 character_set_server=utf8mb4 default_authentication_plugin=mysql_native_password EOF mysql -uroot -plhr -h 172.72.7.35 create database lhrdb; -- 业务用户 CREATE USER lhr identified by lhr; alter user lhr identified by lhr; GRANT DBA to lhr ; grant SELECT ANY DICTIONARY to lhr; GRANT EXECUTE ON SYS.DBMS_LOCK TO lhr; -- 启动监听 vi /u01/app/oracle/product/11.2.0.4/dbhome_1/network/admin/listener.ora lsnrctl start lsnrctl status |

dataX环境准备

1 2 3 4 5 6 7 8 9 10 | docker rm -f lhrdataX docker run -itd --name lhrdataX -h lhrdataX \ --net=ora-network --ip 172.72.7.77 \ -p 9527:9527 -p 39389:3389 -p 33306:3306 \ -v /sys/fs/cgroup:/sys/fs/cgroup \ --privileged=true lhrbest/datax:2.0 \ /usr/sbin/init http://172.17.0.4:9527/index.html admin/123456 |

Oracle端数据初始化

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 | -- 源端数据初始化 /usr/local/swingbench/bin/oewizard -s -create -c /usr/local/swingbench/wizardconfigs/oewizard.xml -create \ -version 2.0 -cs //172.72.7.34/lhrsdb -dba "sys as sysdba" -dbap lhr -dt thin \ -ts users -u lhr -p lhr -allindexes -scale 0.0001 -tc 16 -v -cl col TABLE_NAME format a30 SELECT a.table_name,a.num_rows FROM dba_tables a where a.OWNER='LHR' ; select object_type,count(*) from dba_objects where owner='LHR' group by object_type; select object_type,status,count(*) from dba_objects where owner='LHR' group by object_type,status; select sum(bytes)/1024/1024 from dba_segments where owner='LHR'; -- 检查键是否正确:https://www.xmmup.com/ogg-01296-biaoyouzhujianhuoweiyijiandanshirengranshiyongquanbulielaijiexixing.html -- 否则OGG启动后,会报错:OGG-01296、OGG-06439、OGG-01169 Encountered an update where all key columns for target table LHR.ORDER_ITEMS are not present. select owner, constraint_name, constraint_type, status, validated from dba_constraints where owner='LHR' and VALIDATED='NOT VALIDATED'; select 'alter table lhr.'||TABLE_NAME||' enable validate constraint '||CONSTRAINT_NAME||';' from dba_constraints where owner='LHR' and VALIDATED='NOT VALIDATED'; -- 删除外键 SELECT 'ALTER TABLE LHR.'|| D.TABLE_NAME ||' DROP constraint '|| D.CONSTRAINT_NAME||';' FROM DBA_constraints d where owner='LHR' and d.CONSTRAINT_TYPE='R'; sqlplus lhr/lhr@172.72.7.34:1521/lhrsdb @/oggoracle/demo_ora_create.sql @/oggoracle/demo_ora_insert.sql SQL> select * from tcustmer; CUST NAME CITY ST ---- ------------------------------ -------------------- -- WILL BG SOFTWARE CO. SEATTLE WA JANE ROCKY FLYER INC. DENVER CO -- 创建2个clob和blob类型的表 sqlplus lhr/lhr@172.72.7.34:1521/lhrsdb @/oggoracle/demo_ora_lob_create.sql exec testing_lobs; select * from lhr.TSRSLOB; drop table IMAGE_LOB; CREATE TABLE IMAGE_LOB ( T_ID VARCHAR2 (5) NOT NULL, T_IMAGE BLOB, T_CLOB CLOB ); -- 插入blob文件 CREATE OR REPLACE DIRECTORY D1 AS '/home/oracle/'; grant all on DIRECTORY D1 TO PUBLIC; CREATE OR REPLACE NONEDITIONABLE PROCEDURE IMG_INSERT(TID VARCHAR2, FILENAME VARCHAR2, name VARCHAR2) AS F_LOB BFILE; B_LOB BLOB; BEGIN INSERT INTO IMAGE_LOB (T_ID, T_IMAGE,T_CLOB) VALUES (TID, EMPTY_BLOB(),name) RETURN T_IMAGE INTO B_LOB; F_LOB := BFILENAME('D1', FILENAME); DBMS_LOB.FILEOPEN(F_LOB, DBMS_LOB.FILE_READONLY); DBMS_LOB.LOADFROMFILE(B_LOB, F_LOB, DBMS_LOB.GETLENGTH(F_LOB)); DBMS_LOB.FILECLOSE(F_LOB); COMMIT; END; / BEGIN IMG_INSERT('1','1.jpg','xmmup.com'); IMG_INSERT('2','2.jpg','www.xmmup.com'); END; / select * from IMAGE_LOB; ----- oracle所有表 SQL> select * from tab; TNAME TABTYPE CLUSTERID ------------------------------ ------- ---------- ADDRESSES TABLE CARD_DETAILS TABLE CUSTOMERS TABLE IMAGE_LOB TABLE INVENTORIES TABLE LOGON TABLE ORDERENTRY_METADATA TABLE ORDERS TABLE ORDER_ITEMS TABLE PRODUCTS VIEW PRODUCT_DESCRIPTIONS TABLE PRODUCT_INFORMATION TABLE PRODUCT_PRICES VIEW TCUSTMER TABLE TCUSTORD TABLE TSRSLOB TABLE TTRGVAR TABLE WAREHOUSES TABLE 18 rows selected. SELECT COUNT(*) FROM LHR.ADDRESSES UNION ALL SELECT COUNT(*) FROM LHR.CARD_DETAILS UNION ALL SELECT COUNT(*) FROM LHR.CUSTOMERS UNION ALL SELECT COUNT(*) FROM LHR.IMAGE_LOB UNION ALL SELECT COUNT(*) FROM LHR.INVENTORIES UNION ALL SELECT COUNT(*) FROM LHR.LOGON UNION ALL SELECT COUNT(*) FROM LHR.ORDERENTRY_METADATA UNION ALL SELECT COUNT(*) FROM LHR.ORDERS UNION ALL SELECT COUNT(*) FROM LHR.ORDER_ITEMS UNION ALL SELECT COUNT(*) FROM LHR.PRODUCT_DESCRIPTIONS UNION ALL SELECT COUNT(*) FROM LHR.PRODUCT_INFORMATION UNION ALL SELECT COUNT(*) FROM LHR.TCUSTMER UNION ALL SELECT COUNT(*) FROM LHR.TCUSTORD UNION ALL SELECT COUNT(*) FROM LHR.TSRSLOB UNION ALL SELECT COUNT(*) FROM LHR.TTRGVAR UNION ALL SELECT COUNT(*) FROM LHR.WAREHOUSES ; COUNT(*) ---------- 281 281 231 4 900724 575 4 424 1642 1000 1000 2 2 1 0 1000 16 rows selected. |

最终,在Oracle端共包括16张表,2个视图,其中2个表TSRSLOB和IMAGE_LOB包括了blob和clob字段。

生成MySQL端DDL语句

可以使用Navicat的数据传输功能或其它工具直接从Oracle端生成MySQL类型的建表语句如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 | mysql -uroot -plhr -h 172.72.7.35 -D lhrdb -f < ddl.sql mysql> show tables; +----------------------+ | Tables_in_lhrdb | +----------------------+ | ADDRESSES | | CARD_DETAILS | | CUSTOMERS | | IMAGE_LOB | | INVENTORIES | | LOGON | | ORDERENTRY_METADATA | | ORDERS | | ORDER_ITEMS | | PRODUCT_DESCRIPTIONS | | PRODUCT_INFORMATION | | TCUSTMER | | TCUSTORD | | TSRSLOB | | TTRGVAR | | WAREHOUSES | +----------------------+ 16 rows in set (0.01 sec) |

DDL语句如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220 221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237 238 239 240 241 242 243 244 245 246 247 248 249 250 251 252 253 254 255 256 257 258 259 260 261 262 263 264 265 266 267 268 269 270 271 272 273 274 275 276 277 278 279 280 281 282 283 284 285 286 287 288 | /* Navicat Premium Data Transfer Source Server : ora12c Source Server Type : Oracle Source Server Version : 120200 Source Host : 192.168.1.35:1526 Source Schema : LHR Target Server Type : MySQL Target Server Version : 80099 File Encoding : 65001 Date: 28/06/2022 15:19:41 */ SET NAMES utf8; SET FOREIGN_KEY_CHECKS = 0; -- ---------------------------- -- Table structure for ADDRESSES -- ---------------------------- DROP TABLE IF EXISTS `ADDRESSES`; CREATE TABLE `ADDRESSES` ( `ADDRESS_ID` decimal(12, 0) NOT NULL, `CUSTOMER_ID` decimal(12, 0) NOT NULL, `DATE_CREATED` datetime NOT NULL, `HOUSE_NO_OR_NAME` varchar(60) NULL, `STREET_NAME` varchar(60) NULL, `TOWN` varchar(60) NULL, `COUNTY` varchar(60) NULL, `COUNTRY` varchar(60) NULL, `POST_CODE` varchar(12) NULL, `ZIP_CODE` varchar(12) NULL, PRIMARY KEY (`ADDRESS_ID`), INDEX `ADDRESS_CUST_IX`(`CUSTOMER_ID` ASC) ); -- ---------------------------- -- Table structure for CARD_DETAILS -- ---------------------------- DROP TABLE IF EXISTS `CARD_DETAILS`; CREATE TABLE `CARD_DETAILS` ( `CARD_ID` decimal(12, 0) NOT NULL, `CUSTOMER_ID` decimal(12, 0) NOT NULL, `CARD_TYPE` varchar(30) NOT NULL, `CARD_NUMBER` decimal(12, 0) NOT NULL, `EXPIRY_DATE` datetime NOT NULL, `IS_VALID` varchar(1) NOT NULL, `SECURITY_CODE` decimal(6, 0) NULL, PRIMARY KEY (`CARD_ID`), INDEX `CARDDETAILS_CUST_IX`(`CUSTOMER_ID` ASC) ); -- ---------------------------- -- Table structure for CUSTOMERS -- ---------------------------- DROP TABLE IF EXISTS `CUSTOMERS`; CREATE TABLE `CUSTOMERS` ( `CUSTOMER_ID` decimal(12, 0) NOT NULL, `CUST_FIRST_NAME` varchar(40) NOT NULL, `CUST_LAST_NAME` varchar(40) NOT NULL, `NLS_LANGUAGE` varchar(3) NULL, `NLS_TERRITORY` varchar(30) NULL, `CREDIT_LIMIT` decimal(9, 2) NULL, `CUST_EMAIL` varchar(100) NULL, `ACCOUNT_MGR_ID` decimal(12, 0) NULL, `CUSTOMER_SINCE` datetime NULL, `CUSTOMER_CLASS` varchar(40) NULL, `SUGGESTIONS` varchar(40) NULL, `DOB` datetime NULL, `MAILSHOT` varchar(1) NULL, `PARTNER_MAILSHOT` varchar(1) NULL, `PREFERRED_ADDRESS` decimal(12, 0) NULL, `PREFERRED_CARD` decimal(12, 0) NULL, PRIMARY KEY (`CUSTOMER_ID`), INDEX `CUST_ACCOUNT_MANAGER_IX`(`ACCOUNT_MGR_ID` ASC), INDEX `CUST_DOB_IX`(`DOB` ASC), INDEX `CUST_EMAIL_IX`(`CUST_EMAIL` ASC) ); -- ---------------------------- -- Table structure for IMAGE_LOB -- ---------------------------- DROP TABLE IF EXISTS `IMAGE_LOB`; CREATE TABLE `IMAGE_LOB` ( `T_ID` varchar(5) NOT NULL, `T_IMAGE` longblob NULL, `T_CLOB` longtext NULL ); -- ---------------------------- -- Table structure for INVENTORIES -- ---------------------------- DROP TABLE IF EXISTS `INVENTORIES`; CREATE TABLE `INVENTORIES` ( `PRODUCT_ID` decimal(6, 0) NOT NULL, `WAREHOUSE_ID` decimal(6, 0) NOT NULL, `QUANTITY_ON_HAND` decimal(8, 0) NOT NULL, PRIMARY KEY (`PRODUCT_ID`, `WAREHOUSE_ID`), INDEX `INV_PRODUCT_IX`(`PRODUCT_ID` ASC), INDEX `INV_WAREHOUSE_IX`(`WAREHOUSE_ID` ASC) ); -- ---------------------------- -- Table structure for LOGON -- ---------------------------- DROP TABLE IF EXISTS `LOGON`; CREATE TABLE `LOGON` ( `LOGON_ID` decimal(65, 30) NOT NULL, `CUSTOMER_ID` decimal(65, 30) NOT NULL, `LOGON_DATE` datetime NULL ); -- ---------------------------- -- Table structure for ORDER_ITEMS -- ---------------------------- DROP TABLE IF EXISTS `ORDER_ITEMS`; CREATE TABLE `ORDER_ITEMS` ( `ORDER_ID` decimal(12, 0) NOT NULL, `LINE_ITEM_ID` decimal(3, 0) NOT NULL, `PRODUCT_ID` decimal(6, 0) NOT NULL, `UNIT_PRICE` decimal(8, 2) NULL, `QUANTITY` decimal(8, 0) NULL, `DISPATCH_DATE` datetime NULL, `RETURN_DATE` datetime NULL, `GIFT_WRAP` varchar(20) NULL, `CONDITION` varchar(20) NULL, `SUPPLIER_ID` decimal(6, 0) NULL, `ESTIMATED_DELIVERY` datetime NULL, PRIMARY KEY (`ORDER_ID`, `LINE_ITEM_ID`), INDEX `ITEM_ORDER_IX`(`ORDER_ID` ASC), INDEX `ITEM_PRODUCT_IX`(`PRODUCT_ID` ASC) ); -- ---------------------------- -- Table structure for ORDERENTRY_METADATA -- ---------------------------- DROP TABLE IF EXISTS `ORDERENTRY_METADATA`; CREATE TABLE `ORDERENTRY_METADATA` ( `METADATA_KEY` varchar(30) NULL, `METADATA_VALUE` varchar(30) NULL ); -- ---------------------------- -- Table structure for ORDERS -- ---------------------------- DROP TABLE IF EXISTS `ORDERS`; CREATE TABLE `ORDERS` ( `ORDER_ID` decimal(12, 0) NOT NULL, `ORDER_DATE` datetime NOT NULL, `ORDER_MODE` varchar(8) NULL, `CUSTOMER_ID` decimal(12, 0) NOT NULL, `ORDER_STATUS` decimal(2, 0) NULL, `ORDER_TOTAL` decimal(8, 2) NULL, `SALES_REP_ID` decimal(6, 0) NULL, `PROMOTION_ID` decimal(6, 0) NULL, `WAREHOUSE_ID` decimal(6, 0) NULL, `DELIVERY_TYPE` varchar(15) NULL, `COST_OF_DELIVERY` decimal(6, 0) NULL, `WAIT_TILL_ALL_AVAILABLE` varchar(15) NULL, `DELIVERY_ADDRESS_ID` decimal(12, 0) NULL, `CUSTOMER_CLASS` varchar(30) NULL, `CARD_ID` decimal(12, 0) NULL, `INVOICE_ADDRESS_ID` decimal(12, 0) NULL, PRIMARY KEY (`ORDER_ID`), INDEX `ORD_CUSTOMER_IX`(`CUSTOMER_ID` ASC), INDEX `ORD_ORDER_DATE_IX`(`ORDER_DATE` ASC), INDEX `ORD_SALES_REP_IX`(`SALES_REP_ID` ASC), INDEX `ORD_WAREHOUSE_IX`(`WAREHOUSE_ID` ASC, `ORDER_STATUS` ASC) ); -- ---------------------------- -- Table structure for PRODUCT_DESCRIPTIONS -- ---------------------------- DROP TABLE IF EXISTS `PRODUCT_DESCRIPTIONS`; CREATE TABLE `PRODUCT_DESCRIPTIONS` ( `PRODUCT_ID` decimal(6, 0) NOT NULL, `LANGUAGE_ID` varchar(3) NOT NULL, `TRANSLATED_NAME` varchar(50) NOT NULL, `TRANSLATED_DESCRIPTION` text NOT NULL, PRIMARY KEY (`PRODUCT_ID`, `LANGUAGE_ID`), UNIQUE INDEX `PRD_DESC_PK`(`PRODUCT_ID` ASC, `LANGUAGE_ID` ASC), INDEX `PROD_NAME_IX`(`TRANSLATED_NAME` ASC) ); -- ---------------------------- -- Table structure for PRODUCT_INFORMATION -- ---------------------------- DROP TABLE IF EXISTS `PRODUCT_INFORMATION`; CREATE TABLE `PRODUCT_INFORMATION` ( `PRODUCT_ID` decimal(6, 0) NOT NULL, `PRODUCT_NAME` varchar(50) NOT NULL, `PRODUCT_DESCRIPTION` text NULL, `CATEGORY_ID` decimal(4, 0) NOT NULL, `WEIGHT_CLASS` decimal(1, 0) NULL, `WARRANTY_PERIOD` longtext NULL, `SUPPLIER_ID` decimal(6, 0) NULL, `PRODUCT_STATUS` varchar(20) NULL, `LIST_PRICE` decimal(8, 2) NULL, `MIN_PRICE` decimal(8, 2) NULL, `CATALOG_URL` varchar(50) NULL, PRIMARY KEY (`PRODUCT_ID`), INDEX `PROD_CATEGORY_IX`(`CATEGORY_ID` ASC), INDEX `PROD_SUPPLIER_IX`(`SUPPLIER_ID` ASC) ); -- ---------------------------- -- Table structure for TCUSTMER -- ---------------------------- DROP TABLE IF EXISTS `TCUSTMER`; CREATE TABLE `TCUSTMER` ( `CUST_CODE` varchar(4) NOT NULL, `NAME` varchar(30) NULL, `CITY` varchar(20) NULL, `STATE` char(2) NULL, PRIMARY KEY (`CUST_CODE`) ); -- ---------------------------- -- Table structure for TCUSTORD -- ---------------------------- DROP TABLE IF EXISTS `TCUSTORD`; CREATE TABLE `TCUSTORD` ( `CUST_CODE` varchar(4) NOT NULL, `ORDER_DATE` datetime NOT NULL, `PRODUCT_CODE` varchar(8) NOT NULL, `ORDER_ID` decimal(65, 30) NOT NULL, `PRODUCT_PRICE` decimal(8, 2) NULL, `PRODUCT_AMOUNT` decimal(6, 0) NULL, `TRANSACTION_ID` decimal(65, 30) NULL, PRIMARY KEY (`CUST_CODE`, `ORDER_DATE`, `PRODUCT_CODE`, `ORDER_ID`) ); -- ---------------------------- -- Table structure for TSRSLOB -- ---------------------------- DROP TABLE IF EXISTS `TSRSLOB`; CREATE TABLE `TSRSLOB` ( `LOB_RECORD_KEY` decimal(65, 30) NOT NULL, `LOB1_CLOB` longtext NULL, `LOB2_BLOB` longblob NULL, PRIMARY KEY (`LOB_RECORD_KEY`) ); -- ---------------------------- -- Table structure for TTRGVAR -- ---------------------------- DROP TABLE IF EXISTS `TTRGVAR`; CREATE TABLE `TTRGVAR` ( `LOB_RECORD_KEY` decimal(65, 30) NOT NULL, `LOB1_VCHAR0` text NULL, `LOB1_VCHAR1` text NULL, `LOB1_VCHAR2` text NULL, `LOB1_VCHAR3` varchar(200) NULL, `LOB1_VCHAR4` text NULL, `LOB1_VCHAR5` text NULL, `LOB1_VCHAR6` varchar(100) NULL, `LOB1_VCHAR7` varchar(250) NULL, `LOB1_VCHAR8` text NULL, `LOB1_VCHAR9` text NULL, `LOB2_VCHAR0` text NULL, `LOB2_VCHAR1` text NULL, `LOB2_VCHAR2` text NULL, `LOB2_VCHAR3` text NULL, `LOB2_VCHAR4` text NULL, `LOB2_VCHAR5` text NULL, `LOB2_VCHAR6` text NULL, `LOB2_VCHAR7` varchar(150) NULL, `LOB2_VCHAR8` text NULL, `LOB2_VCHAR9` varchar(50) NULL, PRIMARY KEY (`LOB_RECORD_KEY`) ); -- ---------------------------- -- Table structure for WAREHOUSES -- ---------------------------- DROP TABLE IF EXISTS `WAREHOUSES`; CREATE TABLE `WAREHOUSES` ( `WAREHOUSE_ID` decimal(6, 0) NOT NULL, `WAREHOUSE_NAME` varchar(35) NULL, `LOCATION_ID` decimal(4, 0) NULL, PRIMARY KEY (`WAREHOUSE_ID`), INDEX `WHS_LOCATION_IX`(`LOCATION_ID` ASC) ); SET FOREIGN_KEY_CHECKS = 1; |

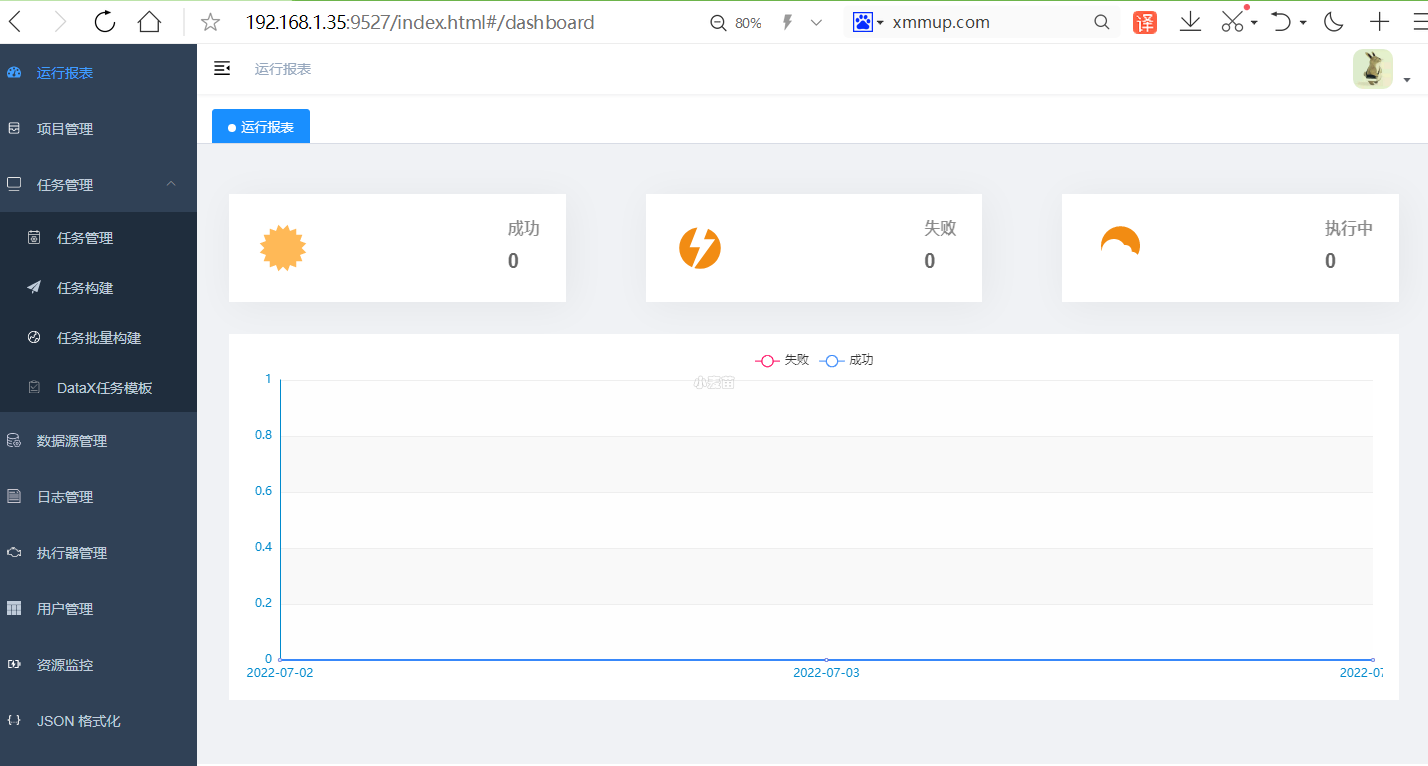

dataX-web配置

1、新增项目

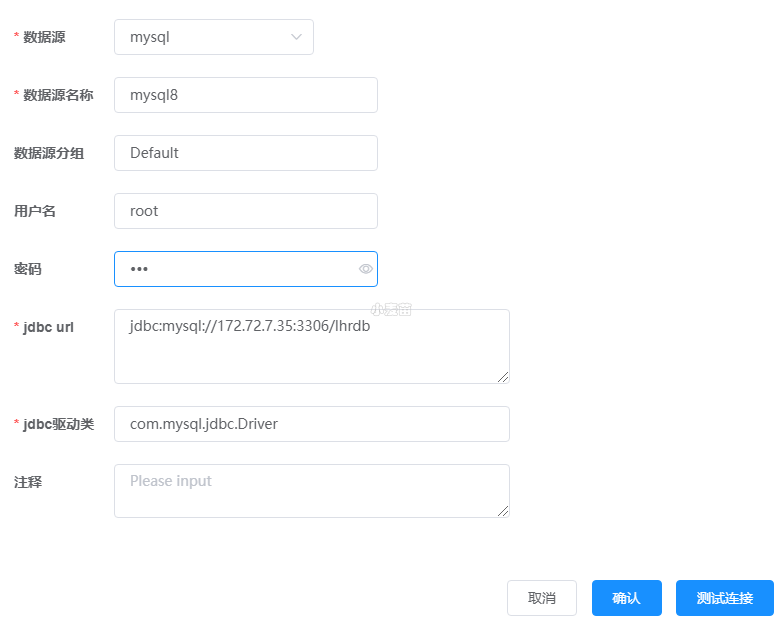

2、新增Oracle和MySQL数据源,并测试通过

1 2 3 4 | jdbc:oracle:thin:@//172.72.7.34:1521/lhrsdb jdbc:mysql://172.72.7.35:3306/lhrdb |

请问老师的secureCRT这个配置是怎么设置的