Monitoring a Greenplum Database with Prometheus and Grafana

Tags: Original, Greenplum, Monitoring, Grafana, Prometheus, Alert emails, greenplum_exporter, PrometheusAlert, DingTalk alerts

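- Introduction to Prometheus and Grafana

- 1. Introduction to Prometheus

- 2. Introduction to Grafana

- Implementing Greenplum Monitoring

- 1. The Greenplum Exporter (metrics collector)

- 2. Supported metrics

- 3. Drawing a status dashboard in Grafana

- Installing Greenplum Monitoring

- Installing Prometheus

- Installing greenplum_exporter

- Configuring Prometheus

- Installing Grafana

- Configuring Grafana

- (1) Adding the Prometheus data source

- (2) Configuring the Greenplum dashboard template

- Monitoring Greenplum hosts

- Configuring alerts

- Downloading and installing Alertmanager and PrometheusAlert

- Configuring Alertmanager in Prometheus

- Modifying alert templates

- DingTalk alert template

- Email alert template configuration

- Modifying alert routing

- Alert rules

- Linux server alerts

- Greenplum database alerts

- Applying the changes

- Results

- Summary

- References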

Introduction to Prometheus and Grafana

1. Introduction to Prometheus

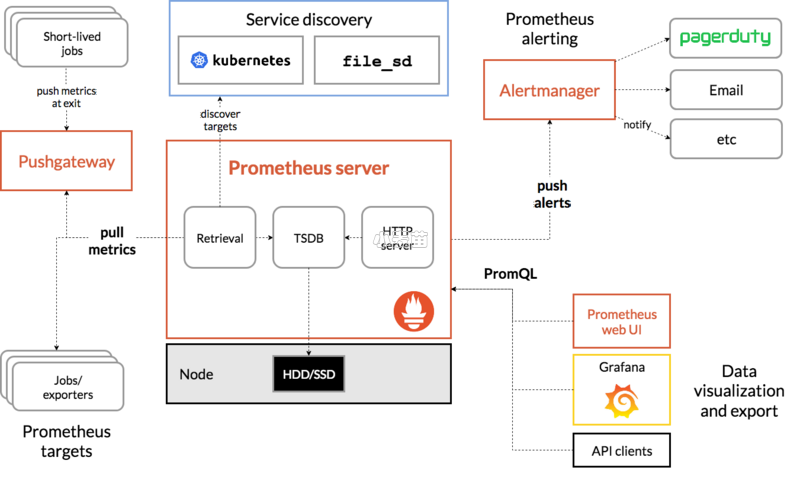

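Prometheus is an open-source monitoring and alerting system with a built-in time-series database (TSDB). It was originally developed at SoundCloud and is written in Go. It has a very active open-source community and performs well enough to support clusters of more than ten thousand machines. Its architecture consists of the following components:

- Prometheus Server: pulls monitoring data from Exporters, stores it, and provides a flexible query language (PromQL) for users.

- Exporter: collects performance data from a target (host, container, ...) and exposes it over an HTTP endpoint for the Prometheus Server to scrape.

- Visualization: visualizing monitoring data is essential to any monitoring solution. Prometheus once shipped its own tool but later deprecated it, because the open-source community produced a better product, Grafana. Grafana integrates seamlessly with Prometheus and provides rich data presentation.

- Alertmanager: users define alerting rules based on monitoring data, and those rules trigger alerts. Once Alertmanager receives an alert, it sends notifications through preconfigured channels, including Email, PagerDuty, and Webhook.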

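2. Introduction to Grafana

Grafana is a cross-platform, open-source metrics analytics and visualization tool. It queries collected data, visualizes it, and sends timely notifications. It has six main features:

1. Visualization: fast, flexible client-side graphs. Panel plugins offer many ways to visualize metrics and logs, and the official library has a rich set of dashboard plugins such as heatmaps, line charts, and graphs.

2. Data sources: Graphite, InfluxDB, OpenTSDB, Prometheus, Elasticsearch, CloudWatch, KairosDB, and others.

3. Alerting: define alert rules for your most important metrics visually. Grafana evaluates them continuously and sends notifications through Slack, PagerDuty, and similar channels when data crosses a threshold.

4. Mixed data sources: combine different data sources in the same graph. The data source can be specified per query, and custom data sources are supported.

5. Annotations: annotate graphs with rich events from different data sources. Hovering over an event shows its full metadata and tags.

6. Filters: ad-hoc filters let you create new key/value filters on the fly, which are automatically applied to all queries that use that data source.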

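Implementing Greenplum Monitoring

Greenplum can be monitored much like PostgreSQL, though there are differences. The following pieces are required:

- Implement a Greenplum Exporter (metrics collector);

- Draw a status dashboard in Grafana;

- Configure alert rules in Prometheus.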

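1. The Greenplum Exporter (metrics collector)

Modeled on the Exporter for PostgreSQL, a Greenplum Exporter has been implemented. The project is hosted at:

https://github.com/tangyibo/greenplum_exporter

greenplum_exporter adds metrics for client connections, account connections, segment storage, cluster node synchronization state, database locks, and more. The full list follows:

2. Supported metrics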

| No. | Metric name | Type | Labels | Unit | Description | Data source (SQL) | GP version |
|---|---|---|---|---|---|---|---|
| 1 | greenplum_cluster_state | Gauge | version; master (master hostname); standby (standby hostname) | boolean | Whether GP is reachable: 1 = available; 0 = unavailable | `SELECT count(*) from gp_dist_random('gp_id'); select version(); SELECT hostname from gp_segment_configuration where content=-1 and role='p';` | ALL |
| 2 | greenplum_cluster_uptime | Gauge | - | int | Time elapsed since startup | `select extract(epoch from now() - pg_postmaster_start_time());` | ALL |
| 3 | greenplum_cluster_sync | Gauge | - | int | Master-to-Standby sync state: 1 = normal; 0 = abnormal | `SELECT count(*) from pg_stat_replication where state='streaming'` | ALL |
| 4 | greenplum_cluster_max_connections | Gauge | - | int | Maximum number of connections | `show max_connections; show superuser_reserved_connections;` | ALL |
| 5 | greenplum_cluster_total_connections | Gauge | - | int | Current number of connections | `select count(*) total, count(*) filter(where current_query='') idle, count(*) filter(where current_query<>'') active, count(*) filter(where current_query<>'' and not waiting) running, count(*) filter(where current_query<>'' and waiting) waiting from pg_stat_activity where procpid <> pg_backend_pid();` | ALL |
| 6 | greenplum_cluster_idle_connections | Gauge | - | int | Number of idle connections | Same as above | ALL |
| 7 | greenplum_cluster_active_connections | Gauge | - | int | Connections with an active query | Same as above | ALL |
| 8 | greenplum_cluster_running_connections | Gauge | - | int | Connections with a query executing | Same as above | ALL |
| 9 | greenplum_cluster_waiting_connections | Gauge | - | int | Connections with a query waiting to execute | Same as above | ALL |
| 10 | greenplum_node_segment_status | Gauge | hostname; address; dbid; content; preferred_role; port; replication_port | int | Segment status: 1 (U) = up; 0 (D) = down | `select * from gp_segment_configuration;` | ALL |
| 11 | greenplum_node_segment_role | Gauge | hostname; address; dbid; content; preferred_role; port; replication_port | int | Segment role: 1 (P) = primary; 2 (M) = mirror | Same as above | ALL |
| 12 | greenplum_node_segment_mode | Gauge | hostname; address; dbid; content; preferred_role; port; replication_port | int | Segment mode: 1 (S) = Synced; 2 (R) = Resyncing; 3 (C) = Change Tracking; 4 (N) = Not Syncing | Same as above | ALL |
| 13 | greenplum_node_segment_disk_free_mb_size | Gauge | hostname | MB | Free disk space on the segment host (MB) | `SELECT dfhostname as segment_hostname,sum(dfspace)/count(dfspace)/(1024*1024) as segment_disk_free_gb from gp_toolkit.gp_disk_free GROUP BY dfhostname` | ALL |
| 14 | greenplum_cluster_total_connections_per_client | Gauge | client | int | Total connections per client | `select usename, count(*) total, count(*) filter(where current_query='') idle, count(*) filter(where current_query<>'') active from pg_stat_activity group by 1;` | ALL |
| 15 | greenplum_cluster_idle_connections_per_client | Gauge | client | int | Idle connections per client | Same as above | ALL |
| 16 | greenplum_cluster_active_connections_per_client | Gauge | client | int | Active connections per client | Same as above | ALL |
| 17 | greenplum_cluster_total_online_user_count | Gauge | - | int | Number of accounts online | Same as above | ALL |
| 18 | greenplum_cluster_total_client_count | Gauge | - | int | Number of currently connected clients | Same as above | ALL |
| 19 | greenplum_cluster_total_connections_per_user | Gauge | usename | int | Total connections per account | `select client_addr, count(*) total, count(*) filter(where current_query='') idle, count(*) filter(where current_query<>'') active from pg_stat_activity group by 1;` | ALL |
| 20 | greenplum_cluster_idle_connections_per_user | Gauge | usename | int | Idle connections per account | Same as above | ALL |
| 21 | greenplum_cluster_active_connections_per_user | Gauge | usename | int | Active connections per account | Same as above | ALL |
| 22 | greenplum_cluster_config_last_load_time_seconds | Gauge | - | int | Time the system configuration was last loaded | `SELECT pg_conf_load_time()` | Only GPOSS6 and GPDB6 |
| 23 | greenplum_node_database_name_mb_size | Gauge | dbname | MB | Storage space used by each database | `SELECT dfhostname as segment_hostname,sum(dfspace)/count(dfspace)/(1024*1024) as segment_disk_free_gb from gp_toolkit.gp_disk_free GROUP BY dfhostname` | ALL |
| 24 | greenplum_node_database_table_total_count | Gauge | dbname | - | Total number of tables in each database | `SELECT count(*) as total from information_schema.tables where table_schema not in ('gp_toolkit','information_schema','pg_catalog');` | ALL |
| 25 | greenplum_exporter_total_scraped | Counter | - | int | - | - | ALL |
| 26 | greenplum_exporter_total_error | Counter | - | int | - | - | ALL |
| 27 | greenplum_exporter_scrape_duration_second | Gauge | - | int | - | - | ALL |
| 28 | greenplum_server_users_name_list | Gauge | - | int | User list (details) | `SELECT usename from pg_catalog.pg_user;` | ALL |
| 29 | greenplum_server_users_total_count | Gauge | - | int | Total number of users | Same as above | ALL |
| 30 | greenplum_server_locks_table_detail | Gauge | pid;datname;usename;locktype;mode;application_name;state;lock_satus;query | int | Lock information | `SELECT * from pg_locks` | ALL |
| 31 | greenplum_server_database_hit_cache_percent_rate | Gauge | - | float | Cache hit rate | `select sum(blks_hit)/(sum(blks_read)+sum(blks_hit))*100 from pg_stat_database;` | ALL |
| 32 | greenplum_server_database_transition_commit_percent_rate | Gauge | - | float | Transaction commit rate | `select sum(xact_commit)/(sum(xact_commit)+sum(xact_rollback))*100 from pg_stat_database;` | ALL |
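To make the status encoding in the table concrete, here is a minimal sketch that scans exporter output for segments reporting `greenplum_node_segment_status` equal to 0 (down). The metric name and the `dbid` label come from the table. The helper names `sample_metrics` and `down_segments`, the host names, and the sample values are assumptions for illustration only; real output from the `/metrics` endpoint may carry more labels.

```shell
# Hypothetical sample of exporter output in the Prometheus text format.
# Host names, dbid values, and the version string are made up.
sample_metrics() {
  cat <<'EOF'
# TYPE greenplum_node_segment_status gauge
greenplum_node_segment_status{address="sdw1",content="0",dbid="2",hostname="sdw1",port="6000",preferred_role="p"} 1
greenplum_node_segment_status{address="sdw2",content="1",dbid="3",hostname="sdw2",port="6000",preferred_role="p"} 0
greenplum_cluster_state{master="mdw",standby="smdw",version="6.23.0"} 1
EOF
}

# Read metrics text on stdin and print the dbid of every segment whose
# status value (the last field on the line) is 0, meaning down.
down_segments() {
  awk '
    /^greenplum_node_segment_status[{]/ && $NF == 0 {
      if (match($0, /dbid="[^"]*"/)) {
        # Skip the 6-character prefix dbid=" and drop the closing quote.
        print substr($0, RSTART + 6, RLENGTH - 7)
      }
    }
  '
}

sample_metrics | down_segments
```

Against a live exporter, the same filter could be fed from `curl -s http://<exporter-host>:9297/metrics | down_segments`. In a real deployment this check would more naturally live in a Prometheus alert rule; the script only illustrates how the 1/0 encoding is read.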

3. Drawing a status dashboard in Grafana

With the metrics above, the Grafana dashboard can be configured. See:

https://github.com/tangyibo/greenplum_exporter/blob/master/grafana/greenplum_dashboard.json

Installing Greenplum Monitoring

This section describes the installation process on CentOS 7.

Installing Prometheus

Download locations:

https://prometheus.io/download/

https://github.com/prometheus/prometheus

```shell
docker run -d --name lhrgpPromethues -h lhrgpPromethues \
  --net=lhrnw --ip 172.72.6.50 \
  -p 2222:22 -p 23389:3389 -p 29090:9090 -p 29093:9093 -p 23000:3000 -p 29297:9297 \
  -v /sys/fs/cgroup:/sys/fs/cgroup \
  --privileged=true \
  lhrbest/lhrcentos76:9.0 \
  /usr/sbin/init

docker exec -it lhrgpPromethues bash

cd /soft
# Alternative download through a mirror:
# wget https://ghproxy.com/https://github.com/prometheus/prometheus/releases/download/v2.42.0/prometheus-2.42.0.linux-amd64.tar.gz
wget https://github.com/prometheus/prometheus/releases/download/v2.42.0/prometheus-2.42.0.linux-amd64.tar.gz
tar -zxvf prometheus-2.42.0.linux-amd64.tar.gz -C /usr/local/
ln -s /usr/local/prometheus-2.42.0.linux-amd64 /usr/local/prometheus
ln -s /usr/local/prometheus/prometheus /usr/local/bin/prometheus

# Prometheus can be run directly, or as a systemd service
prometheus --config.file=/usr/local/prometheus/prometheus.yml --storage.tsdb.path=/usr/local/prometheus/data/ --web.enable-lifecycle --storage.tsdb.retention.time=60d &

# Check that port 9090 is listening
lsof -i:9090
netstat -tulnp | grep 9090

# Web UI: http://192.168.8.8:29090
```

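`--web.enable-lifecycle`: with this flag, the configuration file can be hot-reloaded remotely without restarting Prometheus, by calling `curl -X POST http://ip:9090/-/reload`.

`--storage.tsdb.retention.time`: data is kept for 15 days by default. Pass this flag at startup to control the retention period (60 days in the command above).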
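The reload call can be wrapped in a small helper that reports what happened. The sketch below is illustrative: the function names `describe_reload_status` and `reload_prometheus` are hypothetical, and the status mapping is an assumption (200 on success, 403 when the lifecycle API is disabled, and curl's `000` when no HTTP response was received); check it against your Prometheus version.

```shell
# Map the HTTP status code returned by POST /-/reload to a short message.
# Assumed mapping: 200 = success, 403 = lifecycle API not enabled,
# 000 = curl received no HTTP response at all.
describe_reload_status() {
  case "$1" in
    200) echo "reloaded" ;;
    403) echo "lifecycle API disabled (start with --web.enable-lifecycle)" ;;
    000) echo "unreachable" ;;
    *)   echo "failed (HTTP $1)" ;;
  esac
}

# POST to the reload endpoint of the given base URL and print the outcome.
# Example: reload_prometheus http://172.72.6.50:9090
reload_prometheus() {
  code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 -X POST "$1/-/reload")
  describe_reload_status "$code"
}
```

Keeping the status mapping separate from the network call lets the mapping be checked without a running server. A reload rejected for other reasons, such as an invalid `prometheus.yml`, falls into the generic `failed` branch.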

Creating a systemd service

```shell
cat > /etc/systemd/system/prometheus.service <<EOF
[Unit]
Description=prometheus
After=network.target

[Service]
Type=simple
User=root
ExecStart=/usr/local/prometheus/prometheus --config.file=/usr/local/prometheus/prometheus.yml --storage.tsdb.path=/usr/local/prometheus/data/ --web.enable-lifecycle --storage.tsdb.retention.time=60d
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
```

Starting Prometheus

```shell
systemctl daemon-reload
systemctl start prometheus
systemctl status prometheus
```

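Accessing the web UI

To verify that the installation succeeded, open the Prometheus web UI, which in this environment is http://192.168.8.8:29090 (host port 29090 is mapped to the container's port 9090).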

Installing greenplum_exporter

- GitHub: https://github.com/tangyibo/greenplum_exporter

- Gitee: https://gitee.com/inrgihc/greenplum_exporter

```shell
wget https://github.com/tangyibo/greenplum_exporter/releases/download/v1.1/greenplum_exporter-1.1-rhel7.x86_64.rpm
rpm -ivh greenplum_exporter-1.1-rhel7.x86_64.rpm

# Connection URL format:
# postgres://[account, must be gpadmin]:[password of gpadmin]@[database IP address]:[database port]/[database name, must be postgres]?[param]=[value]&[param]=[value]
echo 'GPDB_DATA_SOURCE_URL=postgres://gpadmin:lhr@172.72.6.40:5432/postgres?sslmode=disable' > /usr/local/greenplum_exporter/etc/greenplum.conf

systemctl enable greenplum_exporter
systemctl start greenplum_exporter
systemctl status greenplum_exporter

# Check that the exporter is listening on port 9297 and serving metrics
netstat -tulnp | grep 9297
curl http://172.72.6.50:9297/metrics
```
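Since the connection URL has fixed parts (the `gpadmin` account and the `postgres` database), the configuration line can be generated instead of typed by hand. The helper below is a hypothetical sketch, not part of greenplum_exporter. It assumes the password contains no characters that need URL encoding and always appends `sslmode=disable`, as in the example above.

```shell
# Hypothetical helper: print the GPDB_DATA_SOURCE_URL line for greenplum.conf.
# Usage: gp_dsn_line PASSWORD HOST [PORT]   (PORT defaults to 5432)
# The account is fixed to gpadmin and the database to postgres, as the
# exporter requires. The password is inserted as-is, without URL encoding.
gp_dsn_line() {
  printf 'GPDB_DATA_SOURCE_URL=postgres://gpadmin:%s@%s:%s/postgres?sslmode=disable\n' \
    "$1" "$2" "${3:-5432}"
}

# Reproduces the line used in this article.
gp_dsn_line lhr 172.72.6.40
```

The output can be redirected to `/usr/local/greenplum_exporter/etc/greenplum.conf` in place of the `echo` command.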

Notes:

1. The exporter supports Greenplum V5.x and Greenplum V6.x.

2. The software is installed by default in /usr/local/greenplum_exporter