合 MySQL之Navicat、into outfile、mysql命令、mysqldump、mysqlpump、mydumper等导出导入文本数据(csv、txt、sql等)

Tags: MySQL数据迁移逻辑导出导入mysqldumpcsv文件txt文件文本导出文本导入文本导出导入into outfileLoad Datamydumpermysqlpump

文章目录[隐藏]

我的总结

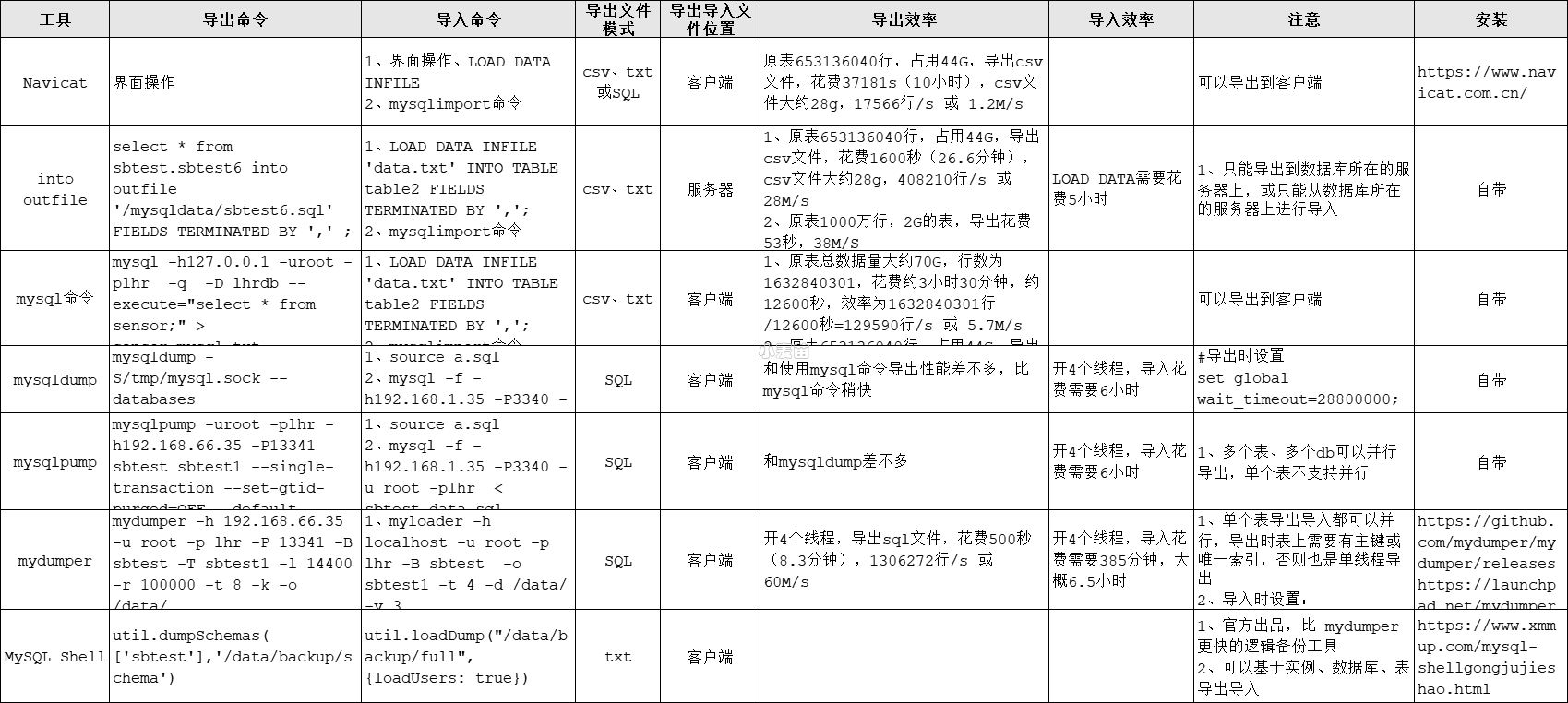

Navicat、into outfile、mysql命令、mysqldump、mysqlpump、mydumper导出导入数据

| 工具 | 导出命令 | 导入命令 | 导出文件模式 | 导出导入文件位置 | 导入效率 |

|---|---|---|---|---|---|

| Navicat | 界面操作 | 1、界面操作、LOAD DATA INFILE 2、mysqlimport命令 | csv、txt或SQL | 客户端 | |

| into outfile | select * from sbtest.sbtest6 into outfile '/mysqldata/sbtest6.sql' FIELDS TERMINATED BY ',' ; | 1、LOAD DATA INFILE 'data.txt' INTO TABLE table2 FIELDS TERMINATED BY ','; 2、mysqlimport命令 | csv、txt | 服务器 | LOAD DATA需要花费5小时 |

| mysql命令 | mysql -h127.0.0.1 -uroot -plhr -q -D lhrdb --execute="select * from sensor;" > sensor_mysql.txt | 1、LOAD DATA INFILE 'data.txt' INTO TABLE table2 FIELDS TERMINATED BY ','; 2、mysqlimport命令 | csv、txt | 客户端 | |

| mysqldump | mysqldump -S/tmp/mysql.sock --databases business_db_jingbain --set-gtid-purged=OFF --tables sensor > sensor_mysqldump.sql | 1、source a.sql 2、mysql -f -h192.168.1.35 -P3340 -u root -plhr < sbtest_data.sql | SQL | 客户端 | 开4个线程,导入花费需要6小时 |

| mysqlpump | mysqlpump -uroot -plhr -h192.168.66.35 -P13341 sbtest sbtest1 --single-transaction --set-gtid-purged=OFF --default-parallelism=4 > /bk/sbtest_sbtest1.sql | 1、source a.sql 2、mysql -f -h192.168.1.35 -P3340 -u root -plhr < sbtest_data.sql | SQL | 客户端 | 开4个线程,导入花费需要6小时 |

| mydumper | mydumper -h 192.168.66.35 -u root -p lhr -P 13341 -B sbtest -T sbtest1 -l 14400 -r 100000 -t 8 -k -o /data/ | 1、myloader -h localhost -u root -p lhr -B sbtest -o sbtest1 -t 4 -d /data/ -v 3 2、source a.sql 3、mysql -f -h192.168.1.35 -P3340 -u root -plhr < sbtest_data.sql | SQL | 客户端 | 开4个线程,导入花费需要385分钟,大概6.5小时 |

| MySQL Shell | util.dumpSchemas(['sbtest'],'/data/backup/schema') | util.loadDump("/data/backup/full",{loadUsers: true}) | txt | 客户端 |

实例SQL

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220 221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237 238 239 240 241 242 243 244 245 246 247 248 249 250 251 252 253 254 255 256 257 258 259 260 261 262 263 264 265 266 267 268 269 270 271 272 273 274 | -- 总数据量大约70G,行数为1632840301 1、Navicat 导出26G后报错 效率:505308501行/41421s=12200行/s 或 1.7M/s -- 2、使用mysql导出,花费约3小时30分钟,约12600秒,效率为1632840301行/12600秒=129590行/s 或 5.7M/s [root@OCPLHR data]# date Fri Dec 13 09:03:47 CST 2019 [root@OCPLHR data]# mysql -h192.168.1.35 -uroot -pLHR -D business_db_jingbain --execute="select * from sensor;" -q > sensor_mysql.txt [root@OCPLHR data]# date Fri Dec 13 12:32:32 CST 2019 [root@OCPLHR data]# ll total 71000516 -rw-r--r-- 1 root root 72633519676 Dec 13 12:32 sensor.txt [root@OCPLHR data]# ll -h total 68G -rw-r--r-- 1 root root 68G Dec 13 12:32 sensor.txt 注意:mysql -h192.168.1.1 -uroot -p123 -D dbxx --execute="select * from XXT;" > sensor111.txt 其中XXT表一共70G,如果用这种方式导出数据,那么一定需要加上-q参数,否则这个命令会把内存撑爆。先耗内存,然后耗swap空间,直到OS夯住。 -q, --quick Don't cache result, print it row by row. This may slow down the server if the output is suspended. Doesn't use history file. -- 3、使用mysqldump导出,花费约200分钟,基本和mysql导出性能差不多 /var/lib/mysql57/mysql5719/bin/mysqldump -h192.168.1.35 -uroot -pLHR --databases business_db_jingbain --set-gtid-purged=OFF --tables sensor > sensor_mysqldump.sql [root@LHRDB data]# time /var/lib/mysql57/mysql5719/bin/mysqldump -h192.168.1.35 -uroot -pLHR --databases business_db_jingbain --set-gtid-purged=OFF --tables sensor > sensor_mysqldump.dmp mysqldump: [Warning] Using a password on the command line interface can be insecure. real 200m8.739s user 38m21.922s sys 12m21.432s [root@LHRDB data]# ll total 77311652 -rw-r--r-- 1 root root 79167122033 Dec 13 18:00 sensor_mysqldump.dmp [root@LHRDB data]# ll -h total 74G -rw-r--r-- 1 root root 74G Dec 13 18:00 sensor_mysqldump.dmp -- 4、使用mysqlpump导出 mysqlpump -h192.168.1.35 -uroot -pLHR database business_db_jingbain sensor --set-gtid-purged=OFF > sensor_mysqlpump.sql /var/lib/mysql57/mysql5719/bin/mysqldump -S/var/lib/mysql57/mysql5719/data57193310/mysql57193310.sock business_db_jingbain sensor --set-gtid-purged=OFF > sensor_mysqlpump.sql -- 5、使用mydumper导出,开10个线程,花费约2小时,效率为1632840301行/3600秒=453566行/s 或 21M/s mydumper -h 192.168.1.35 -u root -p LHR -B business_db_jingbain -T sensor -l 14400 -r 150000000 -t 12 -k -o /data/ myloader -h localhost -u root -p lhr -B business_db_jingbain -o sensor -t 12 -d /data/ -v 3 mydumper -S /var/lib/mysql57/mysql5719/data57193310/mysql57193310.sock -B business_db_jingbain -T sensor -l 14400 -r 60000000 -t 12 -k -o /data/datatmp/ [root@LHRDB data]# mydumper -h 192.168.1.35 -u root -p LHR -B business_db_jingbain -T sensor -l 14400 -r 150000000 -t 12 -k -o /data/ ** (mydumper:28001): WARNING **: Executing in no-locks mode, snapshot will notbe consistent [root@LHRDB data]# ll -h total 74G -rw-r--r-- 1 root root 82 Dec 16 12:39 business_db_jingbain-schema-create.sql -rw-r--r-- 1 root root 25M Dec 16 12:40 business_db_jingbain.sensor.00000.sql -rw-r--r-- 1 root root 24M Dec 16 12:40 business_db_jingbain.sensor.00001.sql -rw-r--r-- 1 root root 21M Dec 16 12:40 business_db_jingbain.sensor.00002.sql -rw-r--r-- 1 root root 23M Dec 16 12:40 business_db_jingbain.sensor.00003.sql -rw-r--r-- 1 root root 24M Dec 16 12:40 business_db_jingbain.sensor.00004.sql -rw-r--r-- 1 root root 20M Dec 16 12:40 business_db_jingbain.sensor.00005.sql -rw-r--r-- 1 root root 21M Dec 16 12:40 business_db_jingbain.sensor.00006.sql -rw-r--r-- 1 root root 22M Dec 16 12:40 business_db_jingbain.sensor.00007.sql -rw-r--r-- 1 root root 21M Dec 16 12:40 business_db_jingbain.sensor.00008.sql -rw-r--r-- 1 root root 21M Dec 16 12:40 business_db_jingbain.sensor.00009.sql -rw-r--r-- 1 root root 467 Dec 16 12:39 business_db_jingbain.sensor-schema.sql -rw-r--r-- 1 root root 150 Dec 16 12:39 metadata.partial [root@LHRDB data]# ll -h total 134G -rw-r--r-- 1 root root 82 Dec 16 12:39 business_db_jingbain-schema-create.sql -rw-r--r-- 1 root root 5.9G Dec 16 14:06 business_db_jingbain.sensor.00000.sql -rw-r--r-- 1 root root 5.6G Dec 16 14:06 business_db_jingbain.sensor.00001.sql -rw-r--r-- 1 root root 5.9G Dec 16 14:06 business_db_jingbain.sensor.00002.sql -rw-r--r-- 1 root root 6.1G Dec 16 14:06 business_db_jingbain.sensor.00003.sql -rw-r--r-- 1 root root 6.2G Dec 16 14:06 business_db_jingbain.sensor.00004.sql -rw-r--r-- 1 root root 6.0G Dec 16 14:06 business_db_jingbain.sensor.00005.sql -rw-r--r-- 1 root root 5.8G Dec 16 14:06 business_db_jingbain.sensor.00006.sql -rw-r--r-- 1 root root 6.6G Dec 16 14:06 business_db_jingbain.sensor.00007.sql -rw-r--r-- 1 root root 6.0G Dec 16 14:06 business_db_jingbain.sensor.00008.sql -rw-r--r-- 1 root root 5.8G Dec 16 14:06 business_db_jingbain.sensor.00009.sql -rw-r--r-- 1 root root 467 Dec 16 12:39 business_db_jingbain.sensor-schema.sql -rw-r--r-- 1 root root 150 Dec 16 12:39 metadata [root@LHRDB data]# ll -h total 149G -rw-r--r-- 1 root root 82 Dec 16 12:39 business_db_jingbain-schema-create.sql -rw-r--r-- 1 root root 7.5G Dec 16 14:30 business_db_jingbain.sensor.00000.sql -rw-r--r-- 1 root root 7.5G Dec 16 14:32 business_db_jingbain.sensor.00001.sql -rw-r--r-- 1 root root 7.5G Dec 16 14:30 business_db_jingbain.sensor.00002.sql -rw-r--r-- 1 root root 7.5G Dec 16 14:28 business_db_jingbain.sensor.00003.sql -rw-r--r-- 1 root root 7.5G Dec 16 14:27 business_db_jingbain.sensor.00004.sql -rw-r--r-- 1 root root 7.5G Dec 16 14:30 business_db_jingbain.sensor.00005.sql -rw-r--r-- 1 root root 7.6G Dec 16 14:32 business_db_jingbain.sensor.00006.sql -rw-r--r-- 1 root root 7.7G Dec 16 14:23 business_db_jingbain.sensor.00007.sql -rw-r--r-- 1 root root 7.7G Dec 16 14:30 business_db_jingbain.sensor.00008.sql -rw-r--r-- 1 root root 7.7G Dec 16 14:32 business_db_jingbain.sensor.00009.sql -rw-r--r-- 1 root root 467 Dec 16 12:39 business_db_jingbain.sensor-schema.sql -rw-r--r-- 1 root root 188 Dec 16 14:32 metadata ------------ sqlldr导入 CREATE TABLE xxt.sensor ( id int , record_date date , value varchar2(300), sid int , gid int ) tablespace xxt nologging; options(SKIP=1,errors=1000) --options(SKIP=1,ROWS=1000,errors=1000) UNRECOVERABLE load data LENGTH CHARACTER infile '/data/sensor_mysql.txt' APPEND into table xxt.sensor fields terminated by x'09' trailing nullcols ( id, record_date, decode(value,'NULL','') , sid , gid ) sqlldr xxt/lhr control= xxt.ctl parallel=y log='log.txt' bad='bad.bad' direct=true readsize=510430400 streamsize=510430400 multithreading=y ----------- 结果 Bind array size not used in direct path. Column array rows : 5000 Stream buffer bytes:16777216 Read buffer bytes:510430400 Total logical records skipped: 1 Total logical records read: 1632840300 Total logical records rejected: 0 Total logical records discarded: 0 Total stream buffers loaded by SQL*Loader main thread: 326640 Total stream buffers loaded by SQL*Loader load thread: 0 Run began on Mon Dec 16 11:32:18 2019 Run ended on Mon Dec 16 13:05:23 2019 Elapsed time was: 01:33:04.33 CPU time was: 01:19:30.76 ------------------- select count(*) from sensor; --653136040行,44G csv文件,花费37181s,csv文件大约28g ------------- mysqldump mysqldump -uroot -plhr -h192.168.1.35 --single-transaction --hex-blob --set-gtid-purged=OFF --databases jl_hotel > jl_hotel.sql -- http://blog.itpub.net/26736162/viewspace-2686075/ mysqldump --databases jl_hotel --single-transaction --order-by-primary --hex-blob --no-data --routines --events --set-gtid-purged=OFF -uroot -plhr -h192.168.1.35 -P3306 |sed -e 's/DEFINER[ ]*=[ ]*[^*]*\*/\*/' -e 's/DEFINER[ ]*=.*FUNCTION/FUNCTION/' -e 's/DEFINER[ ]*=.*PROCEDURE/PROCEDURE/' -e 's/DEFINER[ ]*=.*TRIGGER/TRIGGER/' -e 's/DEFINER[ ]*=.*EVENT/EVENT/' -e 's/DEFINER[ ]*=.*SQL SECURITY DEFINER/SQL SECURITY DEFINER/' > jl_hotel.sql mysqldump --databases jl_hotel --single-transaction --hex-blob --set-gtid-purged=OFF -uroot -plhr -h192.168.1.35 -P3306 > jl_hotel_data.sql mysqldump --databases jl_hotel -uroot -plhr -h192.168.1.35 -P3306 > jl_hotel_data.sql mysql -f -h192.168.1.35 -P 3306 -u root -plhr < jl_hotel.sql [root@lhrcentos76 mysql]# mysqldump --databases jl_hotel --single-transaction --hex-blob --set-gtid-purged=OFF --no-create-info --skip-triggers -uroot -plhr -h192.168.1.35 -P3306 -r jl_hotel_data.sql mysqldump: [Warning] Using a password on the command line interface can be insecure. mysqldump: Error 2013: Lost connection to MySQL server during query when dumping table `sys_api_log` at row: 112614 mysqldump导出比较慢,报错:mysqldump: Error 2013: Lost connection to MySQL server during query when dumping table `sys_api_log` at row: 112614 ---导出时设置 set global wait_timeout=28800000; set global net_read_timeout=28800; set global net_write_timeout=28800; set global max_allowed_packet=2147483648; ---导入 set sql_log_bin=0; set global innodb_flush_log_at_trx_commit = 2; set global sync_binlog = 20000; set global max_allowed_packet=100000000; set global net_buffer_length=100000; set global interactive_timeout=28800000; set global wait_timeout=28800000; mysqldump --single-transaction --hex-blob --set-gtid-purged=OFF -uroot -plhr -h192.168.1.35 -P3350 --databases sbtest > sbtest_data.sql mysql -f -h192.168.1.35 -P3340 -u root -plhr < sbtest_data.sql mysql -f -uroot -plhr -h192.168.1.35 -P3309 -D ehr_dev < C:\Users\lhrxxt\Desktop\ehr_dev_datafull.sql --- https://support.huaweicloud.com/bestpractice-rds/rds_02_0010.html --- 触发器 set GLOBAL log_bin_trust_function_creators=on; DROP FUNCTION if exists rand_string; delimiter // CREATE DEFINER=`root`@`%` FUNCTION lhrdb.`rand_string`(n INT) RETURNS varchar(255) CHARSET utf8 BEGIN DECLARE chars_str varchar(100) DEFAULT 'abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789'; DECLARE return_str varchar(255) DEFAULT ''; DECLARE i INT DEFAULT 0; WHILE i < n DO SET return_str = concat(return_str,substring(chars_str , FLOOR(1 + RAND()*62 ),1)); SET i = i +1; END WHILE; RETURN return_str; END;// delimiter ; select lhrdb.rand_string(5); -- 存储过程 delimiter // DROP PROCEDURE IF EXISTS mock_isam// CREATE PROCEDURE mock_isam (IN rowCount int) BEGIN DECLARE insertCount int; SET insertCount = 0; DROP TABLE IF EXISTS `isam_table`; /*删掉之前创建的表,因为下面要建这个名字的表*/ /*自己按需求修改以下建表语句构造需要的表*/ CREATE TABLE `isam_table` ( `id` int(11) NOT NULL AUTO_INCREMENT, `name` varchar(20) NOT NULL, `age` tinyint(3) NOT NULL, PRIMARY KEY (`id`) ) ENGINE=MyISAM DEFAULT CHARSET=utf8 AUTO_INCREMENT=1; START TRANSACTION; loopHandler : LOOP /*插入数据*/ INSERT INTO `isam_table`(`name`,`age`) VALUES( rand_string(20), CEIL(RAND() * 110) ); SET insertCount = insertCount + 1; IF (insertCount >= rowCount) THEN LEAVE loopHandler; END IF; END LOOP loopHandler; COMMIT; END // delimiter ; delimiter // DROP PROCEDURE IF EXISTS mock_innodb// CREATE PROCEDURE mock_innodb (IN rowCount int) BEGIN DECLARE insertCount int; SET insertCount = 0; DROP TABLE IF EXISTS `innodb_table`; /*删掉之前创建的表,因为下面要建这个名字的表*/ /*自己按需求修改以下建表语句构造需要的表*/ CREATE TABLE `innodb_table` ( `id` int(11) NOT NULL AUTO_INCREMENT, `name` varchar(20) NOT NULL, `age` tinyint(3) NOT NULL, PRIMARY KEY (`id`) ) ENGINE=innodb DEFAULT CHARSET=utf8 AUTO_INCREMENT=1; START TRANSACTION; loopHandler : LOOP /*插入数据*/ INSERT INTO `innodb_table`(`name`,`age`) VALUES( rand_string(20), CEIL(RAND() * 110) ); SET insertCount = insertCount + 1; IF (insertCount >= rowCount) THEN LEAVE loopHandler; END IF; END LOOP loopHandler; COMMIT; END // delimiter ; call mock_innodb(20000); -- 66s call mock_isam(20000); -- 108s select * from innodb_table; select * from isam_table; select * from mysql.proc where db = 'lhrdb' and `type` = 'PROCEDURE' ; //存储过程 select * from mysql.proc where db = 'lhrdb' and `type` = 'FUNCTION' ; //函数 -- 触发器 create table lhrdb.time (time varchar(100)); CREATE TRIGGER lhrdb.trig1 AFTER INSERT ON isam_table FOR EACH ROW INSERT INTO time VALUES(NOW()); SHOW TRIGGERS from lhrdb; select * from information_schema.`TRIGGERS` where trigger_schema='lhrdb' ; -- event事件 drop table if exists lhrdb.events_list; create table lhrdb.events_list(id int PRIMARY key auto_increment, event_name varchar(20) not null, event_started timestamp not null); drop event lhrdb.event_minute; create event lhrdb.event_minute on schedule every 10 minute do insert into lhrdb.events_list(event_name,event_started) values('event_now', now()); set global event_scheduler =1; show processlist; show events; select * from information_schema.`EVENTS` where event_schema='lhrdb' ; select * from events_list; -- 视图 create or replace view vw_pro_tri_fun_event_lhr as select 'EVENTS' type,event_name NAME,DEFINER from information_schema.`EVENTS` where event_schema='lhrdb' union all select 'TRIGGER',trigger_name,DEFINER from information_schema.`TRIGGERS` where trigger_schema='lhrdb' union all select 'PROCEDURE',NAME,DEFINER from mysql.proc where db = 'lhrdb' and `type` = 'PROCEDURE' union all select 'FUNCTION',NAME,DEFINER from mysql.proc where db = 'lhrdb' and `type` = 'FUNCTION' union all select 'VIEW',TABLE_name,DEFINER from information_schema.VIEWS where TABLE_SCHEMA = 'lhrdb' ; select * from vw_pro_tri_fun_event_lhr; |

mysqldump

参考:https://www.dbaup.com/shiyongmysqldumpqianyileimysqlshujuku.html

其它方案

待测方案:mysqldump、mydumper、select outfile 语句、Util.dumpTables 、Util.exportTable。

环境配置信息

| 配置项 | 说明 |

|---|---|

| MySQL 版本 | 5.7.39 |

| 磁盘随机读写 | 100 MiB/sec |

| 测试表名 | test.t_order_info |

| 行数 | 1000W |

| 字段数 | 6 |

建表语句

1 2 3 4 5 6 7 8 9 10 | CREATE TABLE `t_order_info` ( `ID` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT '自增主键ID', `order_no` varchar(64) NOT NULL DEFAULT '0000' COMMENT '订单编号', `order_status` varchar(2) NOT NULL DEFAULT '01' COMMENT '订单状态: 00-异常、01-待处理、02-进行中、03-已完成', `flag` tinyint(4) NOT NULL DEFAULT '1' COMMENT '删除标识: 1-正常、0-逻辑删除', `create_time` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT '创建时间', `modify_time` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT '更新时间', PRIMARY KEY (`ID`), UNIQUE KEY `IDX_ORDER_NO` (`order_no`) ) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8mb4 COMMENT='订单表' |

导出文件

- 包含数据结构和数据的 备份文件 (mysqldump、mydumper、Util.dumpTables)

- 只包含数据的 数据文件 (select outfile、Util.exportTable)

导出导入命令

| 导出 | 导入 |

|---|---|

| mysqldump | source 或 mysql< xxx.sql |

| mydumper | myloader |

| select outfile | load data |

| Util.dumpTables | Util.loadDump |

| Util.exportTable | Util.importTable |

方案测试

测试首先考虑的是 提升导入效率,并新增了 MySQL Shell 的使用。

mysqldump

单表导出(备份文件)

1 | mysqldump --default-character-set=utf8mb4 --master-data=2 --single-transaction --set-gtid-purged=off --hex-blob --tables test t_order_info |

--master-data=2参数会在备份期间对所有表加锁FLUSH TABLES WITH READ LOCK,并执行SHOW MASTER STATUS语句以获取二进制日志信息。因此,在备份期间可能会影响数据库的并发性能。如果您不需要进行主从复制,则可以考虑不使用--master-data=2参数。--single-transaction参数用于在备份期间“使用事务来确保数据一致性”,从而避免在备份期间锁定表。[必须有]

备份文件

文件内容。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 | -- Table stricture for table `t_order_info` -- DROP TABLE IF EXISTS `t_order_info`; /*!40101 SET @saved_cs_client= @@character_set_client */; /*!49101 SET character_set_client = utf8 */; CREATE TABLE `t_order_info` ( `ID` bigint(2) unsigned NOT NULL AUTO_INCREMENT COMMENT '自增主键ID', `order_no` varchar(64) NOT NULL DEFAULT `0000` COMMENT '订单编号', `order_status` varchar(2) NOT NULL DEFAULT '01' COMMENT '订单状态: 80-异常、81-待处理、2-进行中、03-已完成', `flag` tinyint(4) NOT NULL DEFAULT '1' COMMENT '删除标识: 1-正常、0-逻辑删除', `create_time` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT '创建时间', `modify_time` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT '更新时间', PRIMARY KEY (`ID`), UNIOUE KEY `IDX_ORDER_NO` (`order no`) ) ENGINE=InnODB AUTO_INCREMENT=10129913 DEFAULT CHARSET=utf8m COMMENT='订单表'; /*!40101 SET character_set_client = @saved_cs_client */; -- -- Dumping data for table `t_order_info` -- LOCK TABLES `t_order_info` WRITE; /*!40000 ALTER TABLE `t_order_info` DISABLE KEYS */; |

文件内容解释:

- 没有建库语句,因为是单表备份。

- 有删除表,建立表的语句,小心导入目标库时,删除表的语句,造成数据误删。

- INSERT 语句没有字段名称,导入时表结构要一致。

- 导入过程中有

lock table write操作,导入过程中相关表不可写。 ALTER TABLE t_order_info DISABLE KEYS此语句将禁用该表的所有非唯一索引,这可以提高插入大量数据时的性能。 对应的文件末尾有ALTER TABLEt_order_infoENABLE KEYS;

用途,可以将备份文件中的数据导入自定义库,“文件内容解释”部分遇到的问题可以使用下面参数解决。

--no-create-info不包含建表语句(可以手动创建create table tablename like dbname.tablename;)--skip-add-drop-database不包含删库语句--skip-add-drop-table不包含删表语句--skip-add-locksINSERT 语句前不包含LOCK TABLES t_order_info WRITE;--complete-insertINSERT 语句中包含 列名称(新表的列有增加的时候)。

单表导出备份数据(只导出数据)。

1 2 3 4 | mysqldump --default-character-set=utf8mb4 --master-data=2 --single-transaction --set-gtid-purged=off --hex-blob --no-create-info --skip-add-drop-table --skip-add-locks --tables dbname tablename // 部分数据导出追加参数 --where="create_time>'2023-01-02'" |

导出单库中的某表为 CSV。

1 2 3 4 5 6 7 8 | // 可选不导出表结构, --no-create-info --skip-add-drop-database --skip-add-drop-table /data/mysql/3306/base/bin/mysqldump -uadmin -p123456 -P3306 -h127.0.0.1 --default-character-set=utf8mb4 --single-transaction --set-gtid-purged=OFF --triggers --routines --events --hex-blob --fields-terminated-by=',' --fields-enclosed-by='"' --lines-terminated-by='\n' -T /data/mysql/3306/tmp test //其中 test 后面也可以指定表名,不指定就是全库。 test t_order_info t_order_info01 其中 --single-transaction --set-gtid-purged=OFF --triggers --routines --events --hex-blob 为了防止提示,可选 |

小结

1G 的备份文件,测试结果如下:

- 使用

mysql< xxx.sql导入,耗时 5 分钟。 - 使用用

source xxx.sql导入, 耗时 10 分钟。

推荐第一种,都是单线程。

mydumper

- 版本 0.14.4

多线程导出

1 2 3 4 5 6 7 8 9 10 11 12 | mydumper -u admin -p 123456 -P 3306 -h 127.0.0.1 -t 8 --trx-consistency-only -G -E -R --skip-tz-utc --verbose=3 --compress --no-schemas --rows=1000000 -T test.t_order_info -o /backup // 导出时支持部分导出追加参数 --where="create_time>'2023-01-02'" // 文件输出 test01.t_order_info.00000.dat # 包含 CSV 数据 test01.t_order_info.00000.sql # 包含 LOAD DATA 语句 // 导入命令 LOAD DATA LOCAL INFILE '/data/mysql/3306/tmp/test01.t_order_info.00005.dat' REPLACE INTO TABLE `t_order_info` CHARACTER SET binary FIELDS TERMINATED BY ',' ENCLOSED BY '"' ESCAPED BY '\\' LINES STARTING BY '' TERMINATED BY '\n' (`ID`,`order_no`,`order_status`,`flag`,`create_time`,`modify_time`); |

- 多线程导入

1 2 3 4 5 6 7 | myloader -u admin -p 123456 -P 3306 -h 127.0.0.1 --enable-binlog -t 8 --verbose=3 -B test -d /backup // 导入主库时需要添加 --enable-binlog // 库名可以自定义 -B test |

小结

耗时 2 分钟,建议如下:

- 在数据量大于 50G 的场景中,更推荐 mydumper。

- 补充场景,支持导出 CSV,也支持

--where过滤。

1 | mydumper -u admin -p 123456 -P 3306 -h 127.0.0.1 -t 8 --trx-consistency-only -G -E -R --skip-tz-utc --verbose=3 --where="create_time>'2023-01-02'" --no-schemas --rows=1000000 --load-data --fields-terminated-by ',' --fields-enclosed-by '"' --lines-terminated-by '\n' -T test.t_order_info -o /backup |

导入命令同上,且可以按需手动进行 LOAD DATA。

SELECT OUTFILE 语句

Tips:适合于单表数据的导出,不支持多表。

导出命令,耗时 15 秒。

1 2 3 4 | SELECT * from test01.t_order_info INTO OUTFILE "/data/mysql/3306/tmp/t_order_info0630_full.csv" CHARACTER SET utf8mb4 FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '\'' LINES TERMINATED BY '\n'; // 带列名导出,导入时需添加 IGNORE 1 LINES; SELECT * INTO OUTFILE "/data/mysql/3306/tmp/t_order_info0630_full.csv" CHARACTER SET utf8mb4 FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '\'' LINES TERMINATED BY '\n' from (select 'id','order_no','order_status','flag','create_time','modify_time' union all select * from test01.t_order_info) b; |