Quickly Setting Up a GBase 8c V5 Cluster in Docker by Simulating Multiple Hosts

Tags: Original, High Availability, Installation and Deployment, GBase, Distributed Database, GBase 8c

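- Environment Preparation
  - Provisioning the Environment
- Environment Configuration
  - Add Swap Space and Tune Swap Settings
  - Install Dependency Packages on All Nodes
  - Set the Hostname
  - Tune Kernel Parameters
  - Create the User on All Nodes
  - Configure SSH Trust
  - Extract the Installation Package
- Installation
  - Edit the Cluster Deployment File gbase8c.yml
  - Run the Installation Script
  - Installation Log Location
- Status Check
- Starting and Stopping the Database
  - Stop the Database Service
  - Start the Database Service
- Connection and SQL Test
- Uninstalling the Cluster
- Environment Variables
- Changing the Password
- Configuring Remote Login
- Commands for Changing GBase 8c Parameters
- Troubleshooting Installation Errors
  - gaussDB state is Coredump
  - error while loading shared libraries: libcjson.so.1
  - Current gtm center group num 1 is out of range [0, 0]
  - Rpc request failed:dn1_1 save node info
  - OPENSSL_1_1_1 not defined in file libcrypto.so.1.1
  - Exception: Failed to obtain host name. The cmd is hostname
  - install or upgrade dependency {'patch' : Mone} failed
- Inspection Script
- Summary
- References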

Environment Preparation

Provisioning the Environment

Host machine: 32 GB of RAM and 8 GB of swap. Each machine must have at least 4 GB of RAM plus 8 GB of swap, otherwise the installation fails.
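Before continuing, it can help to confirm that each machine meets the 4 GB RAM + 8 GB swap minimum. The function below is a minimal sketch based on that assumption. It reads `MemTotal` and `SwapTotal` from `/proc/meminfo`, which reports sizes in kB, and prints whichever value falls short. The name `check_mem_requirements` is hypothetical.

Keep in mind that inside a plain Docker container, `/proc/meminfo` usually shows the host's memory. Also, `MemTotal` is typically a little below the installed RAM, so treat the thresholds as approximate.

```bash
#!/usr/bin/env bash
# Pre-install check: at least 4 GiB of RAM and 8 GiB of swap.
# /proc/meminfo reports sizes in kB; the path is a parameter so a sample file can be used.
check_mem_requirements() {
  local meminfo=${1:-/proc/meminfo}
  local min_mem_kb=$((4 * 1024 * 1024))   # 4 GiB
  local min_swap_kb=$((8 * 1024 * 1024))  # 8 GiB
  local mem_kb swap_kb rc=0
  mem_kb=$(awk '/^MemTotal:/ {print $2}' "$meminfo")
  swap_kb=$(awk '/^SwapTotal:/ {print $2}' "$meminfo")
  if (( ${mem_kb:-0} < min_mem_kb )); then
    echo "MemTotal ${mem_kb:-0} kB is below 4 GiB"
    rc=1
  fi
  if (( ${swap_kb:-0} < min_swap_kb )); then
    echo "SwapTotal ${swap_kb:-0} kB is below 8 GiB"
    rc=1
  fi
  return "$rc"
}
```

Example usage on a node: `check_mem_requirements && echo "memory OK"`.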

| IP | Hostname | Role |
|---|---|---|
| 172.72.3.30 | gbase8c_1 | gha_server (high-availability service), dcs (distributed configuration store), gtm (global transaction manager), coordinator |
| 172.72.3.31 | gbase8c_2 | datanode 1 |
| 172.72.3.32 | gbase8c_3 | datanode 2 |

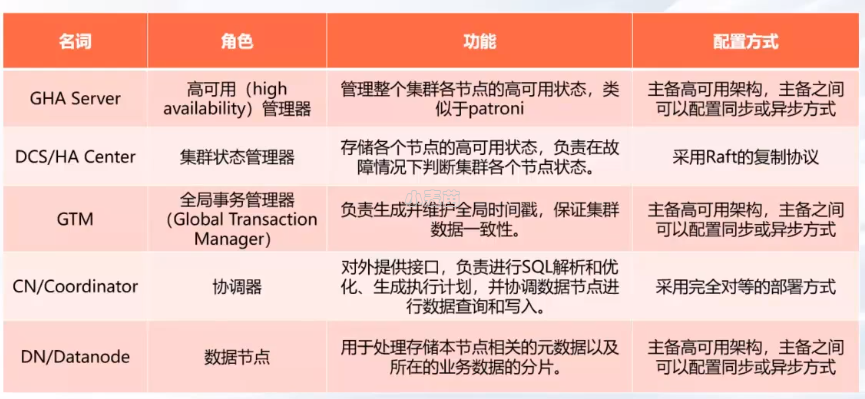

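| Term | Role | Function | Deployment mode |
|---|---|---|---|
| GHA Server | High-availability manager | Manages the high-availability state of every node in the cluster, similar to Patroni | Primary/standby HA architecture; replication between primary and standby can be synchronous or asynchronous |
| DCS/HA Center | Cluster state manager | Stores the high-availability state of each node and determines the state of every node when a failure occurs | Uses the Raft replication protocol |
| GTM | Global Transaction Manager | Generates and maintains global timestamps to keep cluster data consistent | Primary/standby HA architecture; replication between primary and standby can be synchronous or asynchronous |
| CN/Coordinator | Coordinator | Provides the external interface: parses and optimizes SQL, generates execution plans, and coordinates the data nodes for queries and writes | Fully peer-to-peer (all coordinators are equivalent) |
| DN/Datanode | Data node | Stores and processes the node's own metadata and the shards of business data located on it | Primary/standby HA architecture; replication between primary and standby can be synchronous or asynchronous |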

```bash
# Create a user-defined bridge network so that containers can use fixed IPs
docker network create --subnet=172.72.0.0/16 lhrnw

docker rm -f gbase8c_1
docker run -itd --name gbase8c_1 -h gbase8c_1 \
  --net=lhrnw --ip 172.72.3.30 \
  -p 63330:5432 \
  -v /sys/fs/cgroup:/sys/fs/cgroup \
  --privileged=true \
  --add-host='gbase8c_1:172.72.3.30' \
  --add-host='gbase8c_2:172.72.3.31' \
  --add-host='gbase8c_3:172.72.3.32' \
  lhrbest/lhrcentos76:9.0 \
  /usr/sbin/init

docker rm -f gbase8c_2
docker run -itd --name gbase8c_2 -h gbase8c_2 \
  --net=lhrnw --ip 172.72.3.31 \
  -v /sys/fs/cgroup:/sys/fs/cgroup \
  --privileged=true \
  --add-host='gbase8c_1:172.72.3.30' \
  --add-host='gbase8c_2:172.72.3.31' \
  --add-host='gbase8c_3:172.72.3.32' \
  lhrbest/lhrcentos76:9.0 \
  /usr/sbin/init

docker rm -f gbase8c_3
docker run -itd --name gbase8c_3 -h gbase8c_3 \
  --net=lhrnw --ip 172.72.3.32 \
  -v /sys/fs/cgroup:/sys/fs/cgroup \
  --privileged=true \
  --add-host='gbase8c_1:172.72.3.30' \
  --add-host='gbase8c_2:172.72.3.31' \
  --add-host='gbase8c_3:172.72.3.32' \
  lhrbest/lhrcentos76:9.0 \
  /usr/sbin/init

# Copy the installation package and the SSH trust script into gbase8c_1
# (docker cp requires the destination directory to exist)
docker exec gbase8c_1 mkdir -p /soft
docker cp GBase8cV5_S3.0.0B76_centos7.8_x86_64.tar.gz gbase8c_1:/soft/
docker cp sshUserSetup.sh gbase8c_1:/soft/
```
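The three `docker run` commands above differ only in the container name, the IP, and the port mapping on gbase8c_1. That means they can be generated from a single name-to-IP table.

The sketch below is a hypothetical loop version that uses the same image, network, and options. `run_node`, `NODE_IP`, and `DRY_RUN` are illustrative names, not part of any tool. Setting `DRY_RUN=1` prints each command instead of executing it.

```bash
#!/usr/bin/env bash
# Hypothetical loop version of the three docker run commands above.
# DRY_RUN=1 prints each command instead of executing it.
NET=lhrnw
IMAGE=lhrbest/lhrcentos76:9.0
NODES=(gbase8c_1 gbase8c_2 gbase8c_3)
declare -A NODE_IP=([gbase8c_1]=172.72.3.30 [gbase8c_2]=172.72.3.31 [gbase8c_3]=172.72.3.32)

run_node() {
  local name=$1 n
  local -a cmd=(docker run -itd --name "$name" -h "$name" --net="$NET" --ip "${NODE_IP[$name]}")
  # Only gbase8c_1 (the coordinator) publishes container port 5432 on host port 63330
  if [[ $name == gbase8c_1 ]]; then
    cmd+=(-p 63330:5432)
  fi
  cmd+=(-v /sys/fs/cgroup:/sys/fs/cgroup --privileged=true)
  # Every container gets /etc/hosts entries for all three nodes
  for n in "${NODES[@]}"; do
    cmd+=(--add-host="$n:${NODE_IP[$n]}")
  done
  cmd+=("$IMAGE" /usr/sbin/init)

  if [[ ${DRY_RUN:-0} == 1 ]]; then
    echo "${cmd[*]}"
  else
    docker rm -f "$name" >/dev/null 2>&1   # same as the docker rm -f step above
    "${cmd[@]}"
  fi
}
```

To create all three containers, run `for n in "${NODES[@]}"; do run_node "$n"; done`. Prefix the loop with `DRY_RUN=1` to preview the commands first.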

Environment Configuration

Add Swap Space and Tune Swap Settings

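If a machine has 4 GB of RAM or less, add swap space and set the swappiness parameter. Otherwise memory runs out, the system becomes very sluggish, and the cluster ends up in an abnormal state: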

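```bash
# Create the swap file. dd's M suffix means MiB, so bs=10M count=3200
# writes 32000 MiB (about 31 GiB).
dd if=/dev/zero of=/root/swapfile bs=10M count=3200
chmod 0600 /root/swapfile
mkswap /root/swapfile
swapon /root/swapfile
# Enable the swap file at boot and list the active swap devices
echo '/root/swapfile swap swap defaults 0 0' >> /etc/fstab
swapon -s

# Set vm.swappiness to 20, both for the running kernel and persistently
echo 20 > /proc/sys/vm/swappiness
echo 'vm.swappiness=20' >> /etc/sysctl.conf
cat /proc/sys/vm/swappiness
grep swappiness /etc/sysctl.conf
```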
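The `dd` command above creates roughly 31 GiB of swap, which is more than the 8 GB requirement. If disk space is tight, the `count` value can be derived from a target size instead.

This is a small sketch that assumes `dd`'s `M` suffix means MiB. The helper name `swap_dd_count` is hypothetical.

```bash
#!/usr/bin/env bash
# Compute the dd "count" for a swap file of a given size.
# Usage: swap_dd_count <target_GiB> [block_MiB]   (block size defaults to 10 MiB, as above)
swap_dd_count() {
  local target_gib=$1 block_mib=${2:-10}
  local target_mib=$((target_gib * 1024))
  # Round up so the file is never smaller than requested
  echo $(( (target_mib + block_mib - 1) / block_mib ))
}
```

For example, `dd if=/dev/zero of=/root/swapfile bs=10M count=$(swap_dd_count 8)` writes 820 blocks of 10 MiB, which is just over 8 GiB.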

Each machine needs at least 4 GB of RAM and 8 GB of swap. Otherwise errors such as the following are reported:

- "Failed to initialize the memory protect for g_instance.attr.attr_storage.cstore_buffers (1024 Mbytes) or shared memory (4496 Mbytes) is larger."
- "could not create shared memory segment: Cannot allocate memory"
- "This error usually means that openGauss's request for a shared memory segment exceeded available memory or swap space, or exceeded your kernel's SHMALL parameter. You can either reduce the request size or reconfigure the kernel with larger SHMALL. To reduce the request size (currently 1841225728 bytes), reduce openGauss's shared memory usage, perhaps by reducing shared_buffers."

```
shared_buffers = 126MB
```

Alternatively, lower the memory parameters in each instance's postgresql.conf:

```bash
# Each file exists only on the node hosting that instance (per the table above:
# gtm1 and cn1 on gbase8c_1, dn1_1 on gbase8c_2, dn2_1 on gbase8c_3).
# Restart the instances afterwards so the new values take effect.
sudo sed -i '/shared_buffers = 1GB/c shared_buffers = 256MB' /home/gbase/data/gtm/gtm1/postgresql.conf
sudo sed -i '/shared_buffers = 1GB/c shared_buffers = 256MB' /home/gbase/data/coord/cn1/postgresql.conf
sudo sed -i '/shared_buffers = 1GB/c shared_buffers = 256MB' /home/gbase/data/dn1/dn1_1/postgresql.conf
sudo sed -i '/shared_buffers = 1GB/c shared_buffers = 256MB' /home/gbase/data/dn2/dn2_1/postgresql.conf
sudo sed -i '/cstore_buffers = 1GB/c cstore_buffers = 32MB' /home/gbase/data/gtm/gtm1/postgresql.conf
sudo sed -i '/cstore_buffers = 1GB/c cstore_buffers = 32MB' /home/gbase/data/coord/cn1/postgresql.conf
sudo sed -i '/cstore_buffers = 1GB/c cstore_buffers = 32MB' /home/gbase/data/dn1/dn1_1/postgresql.conf
sudo sed -i '/cstore_buffers = 1GB/c cstore_buffers = 32MB' /home/gbase/data/dn2/dn2_1/postgresql.conf
```
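The `sed` commands above only change lines whose text contains exactly `shared_buffers = 1GB` or `cstore_buffers = 1GB`. If the generated file holds a different value, `sed` silently changes nothing.

A more tolerant sketch is below. `set_conf_param` is a hypothetical helper that assumes GNU `sed` (for `-i` and `-E`). It replaces any existing, possibly commented-out, `name = ...` line, or appends the setting if none exists.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set "name = value" in a postgresql.conf-style file,
# whatever the current value is, uncommenting the line if needed.
set_conf_param() {
  local file=$1 name=$2 value=$3
  if grep -Eq "^[#[:space:]]*${name}[[:space:]]*=" "$file"; then
    # Replace the whole line (value and any trailing comment)
    sed -i -E "s|^[#[:space:]]*${name}[[:space:]]*=.*|${name} = ${value}|" "$file"
  else
    echo "${name} = ${value}" >> "$file"
  fi
}
```

On each node, a loop such as `for conf in /home/gbase/data/*/*/postgresql.conf; do set_conf_param "$conf" shared_buffers 256MB; set_conf_param "$conf" cstore_buffers 32MB; done` covers only the instance directories present on that node. The glob assumes the directory layout shown above.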

Install Dependency Packages on All Nodes

```bash
yum install -y libaio-devel flex bison ncurses-devel \
  glibc-devel patch readline-devel bzip2 firewalld \
  crontabs net-tools openssh-server openssh-clients which sshpass \
  ntp chrony

# Disable the firewall
systemctl disable firewalld
systemctl stop firewalld
systemctl status firewalld

# Disable SELinux: the config file change takes effect after an OS reboot,
# while setenforce 0 switches to permissive mode immediately
sed -i s/SELINUX=enforcing/SELINUX=disabled/g /etc/selinux/config
setenforce 0
getenforce

# Install ntp or chronyd so that time stays synchronized across all cluster nodes;
# here ntpd is enabled and chronyd is disabled
systemctl unmask ntpd
systemctl enable ntpd
systemctl start ntpd
systemctl status ntpd
systemctl disable chronyd
systemctl stop chronyd
systemctl status chronyd
```
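To confirm that every dependency listed above actually got installed, the package names can be checked one by one with `rpm -q`. This is a sketch: `missing_pkgs`, `REQUIRED_PKGS`, and the `PKG_QUERY` override are hypothetical names. The override exists only so the loop can be tried on a machine without `rpm`.

```bash
#!/usr/bin/env bash
# Print the dependency packages from the yum command above that are not installed.
# PKG_QUERY defaults to "rpm -q"; override it only for testing.
REQUIRED_PKGS=(libaio-devel flex bison ncurses-devel glibc-devel patch readline-devel
  bzip2 firewalld crontabs net-tools openssh-server openssh-clients which sshpass ntp chrony)

missing_pkgs() {
  local query=${PKG_QUERY:-rpm -q}
  local pkg rc=0
  for pkg in "${REQUIRED_PKGS[@]}"; do
    # $query is intentionally unquoted so "rpm -q" splits into command and option
    if ! $query "$pkg" >/dev/null 2>&1; then
      echo "$pkg"
      rc=1
    fi
  done
  return "$rc"
}
```

Running `missing_pkgs` on each node prints nothing and returns 0 when all packages are present.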

Set the Hostname

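Adjust the IP addresses of the three nodes to match your environment. Here I use the three IPs listed above and set the hostname on each node accordingly: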

```bash
# node 1
hostnamectl set-hostname gbase8c_1.lhr.com
# node 2
hostnamectl set-hostname gbase8c_2.lhr.com
# node 3
hostnamectl set-hostname gbase8c_3.lhr.com
```

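With Docker this step can be skipped, because each container's hostname is already set by the `-h` option of `docker run`.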
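With Docker, the name-to-IP mapping comes from the `--add-host` options, which end up in each container's `/etc/hosts`.

Below is a minimal sketch for confirming that mapping on every node, assuming the IPs from the node table. `check_hosts_file` and `EXPECTED_HOSTS` are hypothetical names.

```bash
#!/usr/bin/env bash
# Check that every node name maps to the expected IP in a hosts file (default /etc/hosts).
declare -A EXPECTED_HOSTS=([gbase8c_1]=172.72.3.30 [gbase8c_2]=172.72.3.31 [gbase8c_3]=172.72.3.32)

check_hosts_file() {
  local file=${1:-/etc/hosts} name ip rc=0
  for name in "${!EXPECTED_HOSTS[@]}"; do
    ip=${EXPECTED_HOSTS[$name]}
    # A line matches if its first field is the IP and any later field is the name
    if ! awk -v ip="$ip" -v n="$name" \
        '$1 == ip { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
      echo "missing: $ip $name"
      rc=1
    fi
  done
  return "$rc"
}
```

Run `check_hosts_file` in each container. It prints one `missing:` line per absent mapping, in no particular order.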

Tune Kernel Parameters

```bash
cat >> /etc/sysctl.conf <<"EOF"
kernel.shmmax = 4398046511104
kernel.shmmni = 4096
kernel.shmall = 4000000000
kernel.sem = 32000 1024000000 500 32000
EOF

sysctl -p
```
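After `sysctl -p`, the live values under `/proc/sys/kernel` can be compared against the minimums above. This sketch assumes the four values written to `/etc/sysctl.conf` are the targets; `check_kernel_params` and `num_ge` are hypothetical names.

The comparison is done on decimal strings rather than with bash arithmetic. Many kernels default `shmmax` and `shmall` to 18446744073692774399, which overflows bash's signed 64-bit integers.

```bash
#!/usr/bin/env bash
# Succeeds if non-negative decimal integer $1 >= $2, compared as strings so that
# values above 2^63-1 do not overflow bash arithmetic.
num_ge() {
  local a=$1 b=$2
  [[ $a =~ ^[0-9]+$ && $b =~ ^[0-9]+$ ]] || return 1
  if (( ${#a} != ${#b} )); then
    (( ${#a} > ${#b} ))
  else
    [[ $a > $b || $a == "$b" ]]
  fi
}

# Compare live kernel values with the minimums appended to /etc/sysctl.conf above.
# The directory is a parameter (default /proc/sys/kernel) so sample files can be used.
check_kernel_params() {
  local dir=${1:-/proc/sys/kernel} rc=0 val i
  local -a names=(shmmax shmmni shmall) mins=(4398046511104 4096 4000000000)
  for i in 0 1 2; do
    read -r val < "$dir/${names[i]}"
    num_ge "$val" "${mins[i]}" || { echo "${names[i]}=$val"; rc=1; }
  done
  # kernel.sem holds four fields: SEMMSL SEMMNS SEMOPM SEMMNI
  local -a sem_min=(32000 1024000000 500 32000) sem
  read -r -a sem < "$dir/sem"
  for i in 0 1 2 3; do
    num_ge "${sem[i]}" "${sem_min[i]}" || { echo "sem[$i]=${sem[i]}"; rc=1; }
  done
  return "$rc"
}
```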

If kernel.shmmax is set too low, startup fails with a shared-memory error like this:

```
FATAL: could not create shared memory segment: Invalid argument
DETAIL: Failed system call was shmget(key=6666001, size=4714683328, 03600).
HINT: This error usually means that openGauss's request for a shared memory segment exceeded your kernel's SHMMAX parameter. You can either reduce the request size or reconfigure the kernel with larger SHMMAX. To reduce the request size (currently 4714683328 bytes), reduce openGauss's shared memory usage, perhaps by reducing shared_buffers.
If the request size is already small, it's possible that it is less than your kernel's SHMMIN parameter, in which case raising the request size or reconfiguring SHMMIN is called for.
The openGauss documentation contains more information about shared memory configuration.
```
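The HINT above comes down to two size limits:

- `shmget()` fails with `EINVAL` ("Invalid argument", as in the FATAL line) when the segment size exceeds `kernel.shmmax`.
- It fails with `ENOSPC` when the system-wide total would exceed `kernel.shmall`, which is counted in pages.

The function below is a simplified sketch of those two checks. It ignores memory already used by other segments and the SHMMNI segment limit. The name `shm_request_fits` is hypothetical.

```bash
#!/usr/bin/env bash
# Simplified model of the two size checks behind the shmget() failure above:
#   size > SHMMAX                     -> EINVAL ("Invalid argument")
#   pages needed > SHMALL (in pages)  -> ENOSPC
# Ignores memory already used by other segments and the SHMMNI segment count.
# Arguments: request bytes, shmmax, shmall, page size (default: getconf PAGE_SIZE).
shm_request_fits() {
  local req=$1 shmmax=$2 shmall=$3 page=${4:-$(getconf PAGE_SIZE)}
  # Values longer than 18 digits (e.g. the 18446744073692774399 kernel default)
  # would overflow bash arithmetic; treat them as effectively unlimited.
  (( ${#shmmax} > 18 )) && shmmax=999999999999999999
  (( ${#shmall} > 18 )) && shmall=999999999999999999
  local pages=$(( (req + page - 1) / page ))
  if (( req > shmmax )); then
    echo "request $req bytes exceeds SHMMAX $shmmax"
    return 1
  fi
  if (( pages > shmall )); then
    echo "request needs $pages pages, exceeds SHMALL $shmall"
    return 1
  fi
  return 0
}
```

To check the request from the log against the current kernel limits, run `shm_request_fits 4714683328 "$(cat /proc/sys/kernel/shmmax)" "$(cat /proc/sys/kernel/shmall)"`. With the values recommended above, the request fits: 4714683328 bytes is 1151046 pages of 4096 bytes.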

Create the User on All Nodes

```bash
useradd gbase
echo "gbase:lhr" | chpasswd
echo "gbase ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers
```
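The `echo ... >>` appends used in this setup add a duplicate entry each time the steps are re-run. That applies to `/etc/sudoers` here and to `/etc/fstab` and `/etc/sysctl.conf` earlier.

A small idempotent alternative is sketched below. `append_once` is a hypothetical helper, not part of any package.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if that exact line is not already present.
append_once() {
  local line=$1 file=$2
  # -x: whole-line match, -F: fixed string (no regex), -q: quiet
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

For example, the user step could become:

1. `id gbase >/dev/null 2>&1 || useradd gbase`
2. `append_once "gbase ALL=(ALL) NOPASSWD: ALL" /etc/sudoers`
3. `visudo -c`, to validate the sudoers syntax.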

Configure SSH Trust

Run the following as root on the primary node only:

```bash
# As root, on gbase8c_1 only (sshUserSetup.sh was copied to /soft with docker cp)
cd /soft
./sshUserSetup.sh -user gbase -hosts "gbase8c_1 gbase8c_2 gbase8c_3" -advanced -exverify -confirm

# On all nodes
chmod 600 /home/gbase/.ssh/config
```
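Once the trust is set up, logging in as gbase from the primary node to every node should no longer prompt for anything. In this sketch, `BatchMode=yes` makes `ssh` fail instead of asking for a password or host-key confirmation, so a failure points at an incomplete setup.

`check_ssh_trust` and the `SSH_CMD` override are hypothetical names. The override exists only so the loop can be tried without real hosts.

```bash
#!/usr/bin/env bash
# Verify passwordless SSH from the current user to each host given as an argument.
# SSH_CMD defaults to ssh; override it only for testing.
check_ssh_trust() {
  local ssh_cmd=${SSH_CMD:-ssh} host rc=0
  for host in "$@"; do
    if $ssh_cmd -o BatchMode=yes -o ConnectTimeout=5 "$host" true >/dev/null 2>&1; then
      echo "ok: $host"
    else
      echo "FAILED: $host"
      rc=1
    fi
  done
  return "$rc"
}
```

As the gbase user on gbase8c_1, run `check_ssh_trust gbase8c_1 gbase8c_2 gbase8c_3`.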

Extract the Installation Package

On the primary node only, extract the installation package GBase8cV5_S3.0.0B76_centos7.8_x86_64.tar.gz:

```bash
su - gbase
mkdir -p /home/gbase/gbase_package
cp /soft/GBase8cV5_S3.0.0B76_centos7.8_x86_64.tar.gz /home/gbase/gbase_package
cd /home/gbase/gbase_package
tar -zxvf GBase8cV5_S3.0.0B76_centos7.8_x86_64.tar.gz
tar zxf GBase8cV5_S3.0.0B76_CentOS_x86_64_om.tar.gz
```

Example: